Welcome to the first part of a series of tutorials about Directed Probabilistic Graphical Models (PGMs) and Variational Methods. Directed PGMs (or Bayesian Networks) are a powerful probabilistic modelling technique in the machine learning literature and have been studied rigorously by researchers over the years. Variational Methods are a family of algorithms that arise in the context of Directed PGMs when a problem involves solving an intractable integral. Doing inference on a set of latent variables (given a set of observed variables) involves such an intractable integral. Variational Inference (VI) is a specialised form of variational method that handles this situation. This tutorial is NOT for absolute beginners: I assume the reader has basic-to-moderate knowledge of random variables, probability theory and PGMs. The next tutorial in this series will cover one particular method built on top of VI, namely the “Variational Autoencoder (VAE)”.

A review of Directed PGMs

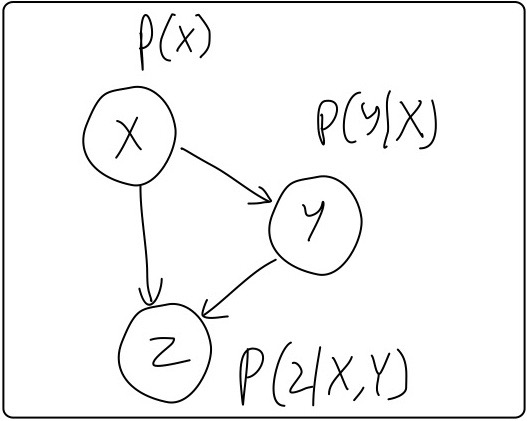

A Directed PGM, also known as a Bayesian Network, is a set of random variables (RVs) associated with a graph structure (a directed acyclic graph, DAG) expressing conditional independence (CI) assumptions among them. Without the CI assumptions, one would have to model the full joint distribution over all the RVs, which would be difficult. Fig. 1 shows a typical DAG expressing the conditional independence among the set of participating RVs.

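In general, the joint distribution over a set of RVs $x_1, \dots, x_K$ factorizes according to the graph as

$$p(x_1, \dots, x_K) = \prod_{k=1}^{K} p\left(x_k \mid \mathrm{pa}(x_k)\right)$$

Where $\mathrm{pa}(x_k)$ denotes the set of parents of $x_k$ in the DAG.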

Ancestral sampling

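A key idea in Directed PGMs is the way we sample from them. We use something known as Ancestral Sampling. Unlike sampling from an unstructured joint distribution over all random variables ($x_1, \dots, x_K$), ancestral sampling exploits the graph: we visit the nodes in topological order, so that every RV is sampled from its conditional distribution only after all of its parents have been sampled.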

So, in the example depicted in Fig. 1

So, we get one sample as
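The procedure can be sketched in a few lines of Python. The three-node network below (`A -> B`, `A -> C`, `B -> C`) and all of its probabilities are made up for illustration; it is not the graph of Fig. 1. The only thing that matters is that nodes are visited in topological order.

```python
import random

# Hypothetical network (NOT the graph in Fig. 1): A -> B, A -> C, B -> C.
# Each node maps to (parents, CPT); the CPT gives P(node = 1 | parent values).
NETWORK = {
    "A": ((), {(): 0.3}),
    "B": (("A",), {(0,): 0.2, (1,): 0.9}),
    "C": (("A", "B"), {(0, 0): 0.1, (0, 1): 0.5, (1, 0): 0.6, (1, 1): 0.95}),
}
TOPOLOGICAL_ORDER = ["A", "B", "C"]  # parents always precede their children


def ancestral_sample(rng):
    """Draw one joint sample by visiting the nodes in topological order."""
    sample = {}
    for node in TOPOLOGICAL_ORDER:
        parents, cpt = NETWORK[node]
        # The parents are already sampled, so the conditional is fully specified.
        parent_values = tuple(sample[p] for p in parents)
        p_one = cpt[parent_values]
        sample[node] = 1 if rng.random() < p_one else 0
    return sample


rng = random.Random(0)
samples = [ancestral_sample(rng) for _ in range(20000)]
estimate_b = sum(s["B"] for s in samples) / len(samples)
# Exact marginal for comparison: P(B=1) = 0.7 * 0.2 + 0.3 * 0.9 = 0.41
print(estimate_b)
```

Each call returns one complete assignment to all RVs, and the empirical frequency of `B = 1` should be close to the exact marginal noted in the comment.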

Parameterization and Learning

Now that we have a clean way of representing a complicated distribution in the form of a graph structure, we can parameterize each individual distribution in the factorized form, and thereby parameterize the whole joint distribution. Parameterizing the distribution in the example in Fig. 1

For convenience, we will write the above factorization as

For learning, we require a set of data samples (i.e., a dataset) collected from an unknown data generating distribution

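The likelihood of the data samples under our model is the probability our model assigns to those samples. Assuming $N$ i.i.d. samples $x^{(1)}, \dots, x^{(N)}$ and model parameters $\theta$, it is simply

$$\mathcal{L}(\theta) = \prod_{i=1}^{N} p\left(x^{(i)}; \theta\right)$$

The goal of learning is to get a good estimate of $\theta$, typically by maximizing the likelihood (or, equivalently, the log-likelihood).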
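As a small illustration of learning by maximum likelihood, consider a hypothetical two-node network `A -> B` with binary RVs (again, not the graph of Fig. 1; the dataset is made up). For fully observed discrete data, the maximum likelihood estimate of each factor is just a normalized count, and the sketch checks that it scores a higher log-likelihood than an arbitrary alternative.

```python
import math

# Hypothetical fully observed dataset of (a, b) pairs for a network A -> B.
data = [(1, 1), (1, 1), (1, 0), (0, 0), (0, 0), (0, 1), (0, 0), (1, 1)]


def log_likelihood(theta, data):
    """Sum over i.i.d. samples of log p(a, b; theta) = log p(a) + log p(b | a)."""
    total = 0.0
    for a, b in data:
        p_a = theta["a"] if a == 1 else 1.0 - theta["a"]
        p_b1 = theta["b|a"][a]
        p_b = p_b1 if b == 1 else 1.0 - p_b1
        total += math.log(p_a) + math.log(p_b)
    return total


def fit_mle(data):
    """Closed-form maximum likelihood estimate: normalized counts per factor."""
    theta_a = sum(a for a, _ in data) / len(data)
    theta_b = {}
    for a_val in (0, 1):
        rows = [b for a, b in data if a == a_val]
        theta_b[a_val] = sum(rows) / len(rows)
    return {"a": theta_a, "b|a": theta_b}


theta_hat = fit_mle(data)
theta_other = {"a": 0.5, "b|a": {0: 0.5, 1: 0.5}}  # arbitrary alternative
print(theta_hat)
print(log_likelihood(theta_hat, data), log_likelihood(theta_other, data))
```

Because the joint factorizes, each conditional distribution can be estimated independently of the others, which is one practical payoff of the graph structure.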

Inference

Inference in a Directed PGM refers to estimating a set of RVs given another set of RVs in a graph

In case a deterministic answer is desired, one can figure out the expected value of
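For small discrete networks, inference can be done exactly by enumeration. The sketch below uses a hypothetical chain `A -> B -> C` (not the graph of Fig. 1, with made-up probabilities), computes the distribution of `A` given an observed `C` by summing the joint over the unobserved `B`, and then reduces it to a single number via the expected value.

```python
# Hypothetical chain A -> B -> C with binary RVs (not the graph in Fig. 1).
P_A1 = 0.3
P_B1_GIVEN_A = {0: 0.2, 1: 0.9}
P_C1_GIVEN_B = {0: 0.1, 1: 0.7}


def bernoulli(p_one, value):
    """Probability of `value` under a Bernoulli with P(1) = p_one."""
    return p_one if value == 1 else 1.0 - p_one


def joint(a, b, c):
    """p(a, b, c) = p(a) p(b | a) p(c | b), following the chain structure."""
    return (bernoulli(P_A1, a)
            * bernoulli(P_B1_GIVEN_A[a], b)
            * bernoulli(P_C1_GIVEN_B[b], c))


def posterior_a_given_c(c):
    """p(a | c): marginalize out b, then normalize over a."""
    unnormalized = {a: sum(joint(a, b, c) for b in (0, 1)) for a in (0, 1)}
    evidence = sum(unnormalized.values())  # p(c)
    return {a: w / evidence for a, w in unnormalized.items()}


post = posterior_a_given_c(1)
expected_a = sum(a * p for a, p in post.items())  # a deterministic summary
print(post, expected_a)
```

The normalizing sum is cheap here because there are only a handful of configurations; the same sum is what becomes problematic when the unobserved variables are high-dimensional.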

A generative view of data

It's quite important to understand this point. In this section, I won’t tell you anything new per se, but will repeat some of the things I explained in the Ancestral Sampling subsection above.

Given a finite set of data (i.e., a dataset), we start our model-building process by asking ourselves one question: “How could my data have been generated?” The answer to this question is precisely “our model”. The model (i.e., the graph structure) we build is essentially our belief about how the data was generated. We might be wrong, of course. The data may have been generated in some other way, but we always start with a belief - our model. The reason I started modelling my data with the graph structure shown in Fig. 1 is because I believe all my data (i.e.,

Or, equivalently

Expectation-Maximization (EM) Algorithm

The EM algorithm solves the above problem. Although this tutorial is not focused on the EM algorithm, I will give a brief idea of how it works. Remember where we got stuck last time? We didn’t have

The Expectation (E) step estimates

where

And then, the Maximization (M) step plugs that

By repeating the E and M steps iteratively, we converge to a (locally) optimal solution for the parameters and eventually discover the latent factors in the data.
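To make the E/M alternation concrete, here is a minimal sketch of EM on a model chosen purely for illustration: a two-component, one-dimensional Gaussian mixture, where the latent variable is the (unobserved) component that generated each point. The model, the synthetic data and the crude initialisation are all assumptions of this sketch, not part of the tutorial's running example.

```python
import math
import random


def normal_pdf(x, mu, var):
    return math.exp(-0.5 * (x - mu) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def em_gmm_1d(xs, n_iter=50):
    """EM for a two-component 1-D Gaussian mixture (illustrative sketch)."""
    n = len(xs)
    mean = sum(xs) / n
    spread = sum((x - mean) ** 2 for x in xs) / n
    # Crude initialisation from the data range (an assumption of this sketch).
    mu, var, pi = [min(xs), max(xs)], [spread, spread], [0.5, 0.5]
    for _ in range(n_iter):
        # E-step: posterior over the latent component of every point,
        # computed with the current parameter values.
        resp = []
        for x in xs:
            w = [pi[k] * normal_pdf(x, mu[k], var[k]) for k in range(2)]
            total = w[0] + w[1]
            resp.append([w[0] / total, w[1] / total])
        # M-step: re-estimate the parameters using those posteriors as
        # soft assignments.
        for k in range(2):
            nk = sum(r[k] for r in resp)
            mu[k] = sum(r[k] * x for r, x in zip(resp, xs)) / nk
            var[k] = sum(r[k] * (x - mu[k]) ** 2 for r, x in zip(resp, xs)) / nk
            pi[k] = nk / n
    return pi, mu, var


rng = random.Random(0)
xs = ([rng.gauss(-2.0, 0.5) for _ in range(300)]
      + [rng.gauss(3.0, 1.0) for _ in range(700)])
pi, mu, var = em_gmm_1d(xs)
print(pi, mu, var)
```

On this well-separated synthetic data the estimated means should land near the generating values of -2 and 3. With a less fortunate initialisation EM can settle in a poorer local optimum, which is why the solution is only locally optimal in general.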

The intractable inference problem

Apart from the learning problem, which involves estimating the whole joint distribution, there exists another problem that is worth solving on its own - the inference problem, i.e., estimating the latent factor given an observation. For example, we may want to estimate the “pose” of an object given its image in an unsupervised way, or estimate the identity of a person given their facial photograph (our last example). Although we have seen how to perform inference in the EM algorithm, I am rewriting it here for convenience.

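Taking up the same example of a latent variable (i.e., $z$) and an observed variable (i.e., $x$), inference means computing

$$p(z \mid x) = \frac{p(x, z)}{p(x)} = \frac{p(x, z)}{\sum_{z'} p(x, z')}$$

This quantity is also called the posterior.

For continuous $z$, the sum in the denominator becomes an integral:

$$p(z \mid x) = \frac{p(x, z)}{\int p(x, z')\, dz'}$$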

If you are a keen observer, you might notice an apparent problem with the inference: it can be computationally intractable, as it involves a summation/integration over a high-dimensional vector with potentially unbounded support. For example, if the latent variable denotes a continuous “pose” vector of length
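A rough feel for the cost, assuming we tried to approximate the normalizing integral with a naive grid (an assumption made only for illustration): using a fixed number of grid points per dimension, the number of evaluations of the joint grows exponentially with the dimension of the latent vector.

```python
def grid_evaluations(points_per_dim, dim):
    """Number of joint evaluations needed by a naive grid over `dim` dimensions."""
    return points_per_dim ** dim


for dim in (1, 2, 10, 50):
    print(dim, grid_evaluations(100, dim))
```

Even a modest latent dimension makes this count astronomically large, before even accounting for the unbounded support.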

Variational Inference (VI) comes to the rescue

Finally, here we are. This is the one algorithm I was most excited to explain, because some of the ground-breaking ideas of this field were born out of it. Variational Inference (VI), although it has been in the literature for a long time, has recently shown very promising results on problems involving latent variables and deep structure. In the next post, I will go into some of those specific algorithms, but not today. In this article, I will go over the basic framework of VI and how it works.

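The idea is really simple: if we can’t get a tractable closed-form solution for the posterior $p(z \mid x)$, we approximate it.

Let the approximation be $q(z; \phi)$, a distribution over the latent variable $z$ with its own parameters $\phi$. Inference can then be framed as an optimization problem: find the $\phi$ that brings the approximation as close as possible to the true posterior, measured by the KL-divergence,

$$\phi^* = \arg\min_{\phi}\ \mathrm{KL}\left[\, q(z; \phi) \,\|\, p(z \mid x) \,\right]$$

By choosing a family of distributions $q(z; \phi)$ that is flexible enough yet easy to work with, we can hope to get close to the true posterior.

Now let’s expand the KL-divergence term, using $p(z \mid x) = p(x, z) / p(x)$:

$$\mathrm{KL}\left[\, q(z; \phi) \,\|\, p(z \mid x) \,\right] = \mathbb{E}_{q(z;\phi)}\left[\log q(z; \phi)\right] - \mathbb{E}_{q(z;\phi)}\left[\log p(x, z)\right] + \log p(x)$$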

We can compute the first two terms in the above expansion, but oh lord! The third term is the same annoying (intractable) integral we were avoiding before. What do we do now? This seems to be a deadlock!

The Evidence Lower BOund (ELBO)

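Please recall that our original objective was a minimization problem over $\phi$, the parameters of the approximation $q(z; \phi)$:

$$\phi^* = \arg\min_{\phi} \left\{ \mathbb{E}_{q(z;\phi)}\left[\log q(z; \phi)\right] - \mathbb{E}_{q(z;\phi)}\left[\log p(x, z)\right] + \log p(x) \right\}$$

Because the third term is independent of $\phi$, we can drop it and minimize only the first two:

$$\phi^* = \arg\min_{\phi} \left\{ \mathbb{E}_{q(z;\phi)}\left[\log q(z; \phi)\right] - \mathbb{E}_{q(z;\phi)}\left[\log p(x, z)\right] \right\}$$

Or equivalently, maximize (just flip the two terms)

$$\mathrm{ELBO}(q) = \mathbb{E}_{q(z;\phi)}\left[\log p(x, z)\right] - \mathbb{E}_{q(z;\phi)}\left[\log q(z; \phi)\right]$$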

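This term, usually called the ELBO, is quite famous in the VI literature, and you have just witnessed what it looks like and where it comes from. Taking a deeper look into the ELBO and using $p(x, z) = p(x \mid z)\, p(z)$, we get

$$\mathrm{ELBO}(q) = \mathbb{E}_{q(z;\phi)}\left[\log p(x, z)\right] - \mathbb{E}_{q(z;\phi)}\left[\log q(z; \phi)\right] = \mathbb{E}_{q(z;\phi)}\left[\log p(x \mid z)\right] - \mathrm{KL}\left[\, q(z; \phi) \,\|\, p(z) \,\right]$$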

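Now, please consider looking at the last equation for a while, because that is what all our efforts led us to. The last equation no longer contains the intractable evidence term, and it still solves our problem. What it basically says is that maximizing the ELBO amounts to maximizing the expected log-likelihood of the observation under the approximation, while keeping the approximation close to the prior over the latent variable.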
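A toy model where everything is available in closed form makes this tangible. The sketch below assumes (purely for illustration) a prior $z \sim \mathcal{N}(0, 1)$, a likelihood $x \mid z \sim \mathcal{N}(z, 1)$ and a Gaussian approximation $q(z) = \mathcal{N}(m, s^2)$. For this model the exact posterior is $\mathcal{N}(x/2, 1/2)$ and the evidence is $\mathcal{N}(x; 0, 2)$, so the ELBO, written as expected log-likelihood minus KL to the prior, can be compared against the true log-evidence.

```python
import math


def elbo(x, m, s2):
    """ELBO for z ~ N(0, 1), x | z ~ N(z, 1) with q(z) = N(m, s2)."""
    # E_q[log p(x | z)], using E_q[(x - z)^2] = (x - m)^2 + s2.
    expected_log_lik = -0.5 * math.log(2.0 * math.pi) - 0.5 * ((x - m) ** 2 + s2)
    # KL[N(m, s2) || N(0, 1)] in closed form.
    kl_q_prior = 0.5 * (s2 + m ** 2 - 1.0 - math.log(s2))
    return expected_log_lik - kl_q_prior


def log_evidence(x):
    """Exact log p(x): marginally x ~ N(0, 2) in this toy model."""
    return -0.5 * math.log(2.0 * math.pi * 2.0) - x ** 2 / 4.0


x_obs = 1.2
print(log_evidence(x_obs))
print(elbo(x_obs, 0.0, 1.0))          # q equal to the prior
print(elbo(x_obs, x_obs / 2.0, 0.5))  # q equal to the exact posterior
```

With `q` set to the prior the ELBO sits strictly below the log-evidence, and with `q` set to the exact posterior the two coincide. In realistic models neither the posterior nor the evidence is available, and the ELBO is typically maximized numerically over the parameters of `q`.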

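There is one more interpretation (see figure 5) of the KL-divergence expansion that is interesting to us. Rewriting the KL-expansion and substituting $\mathrm{ELBO}(q) = \mathbb{E}_{q(z;\phi)}\left[\log p(x, z)\right] - \mathbb{E}_{q(z;\phi)}\left[\log q(z; \phi)\right]$, we get

$$\log p(x) = \mathrm{ELBO}(q) + \mathrm{KL}\left[\, q(z; \phi) \,\|\, p(z \mid x) \,\right]$$

As we know that the KL-divergence between any two distributions is non-negative,

$$\log p(x) \geq \mathrm{ELBO}(q)$$

So, the ELBO is a lower bound on the log-evidence $\log p(x)$, which is where its name comes from. The bound becomes tight exactly when $q(z; \phi)$ matches the true posterior $p(z \mid x)$.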
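The relation between the log-evidence, the ELBO and the KL-divergence to the true posterior can be verified numerically on a tiny discrete example, where the "intractable" sum is trivially computable. The joint probabilities and the choice of `q` below are made up for illustration; here the ELBO is taken as $\mathbb{E}_q[\log p(x, z)] - \mathbb{E}_q[\log q(z)]$.

```python
import math

# Hypothetical joint p(x, z) for one fixed observation x and z in {0, 1, 2}.
p_joint = [0.10, 0.25, 0.05]
p_x = sum(p_joint)                       # evidence p(x)
posterior = [p / p_x for p in p_joint]   # exact posterior p(z | x)


def elbo(q):
    """E_q[log p(x, z)] - E_q[log q(z)] for a discrete q."""
    return sum(qz * (math.log(pz) - math.log(qz)) for qz, pz in zip(q, p_joint))


def kl(q, p):
    """KL[q || p] for discrete distributions with full support."""
    return sum(qz * (math.log(qz) - math.log(pz)) for qz, pz in zip(q, p))


q = [0.5, 0.3, 0.2]  # an arbitrary approximation
print(math.log(p_x), elbo(q) + kl(q, posterior))
print(elbo(q), elbo(posterior))
```

The two numbers on the first line agree up to floating-point error, and the ELBO evaluated at the exact posterior recovers the log-evidence itself.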

Okay! Way too much math for today. This is, overall, what Variational Inference looks like. Numerous directions of research have emerged from this point onwards, and it is impossible to talk about all of them. But a few directions, which succeeded in grabbing the attention of the community with their amazing formulations and results, will be discussed in later parts of the tutorial series. One of them is the “Variational AutoEncoder” (VAE). Stay tuned.

References

- “Variational Inference: A Review for Statisticians”, David M. Blei, Alp Kucukelbir, Jon D. McAuliffe

- “Pattern Recognition and Machine Learning”, C.M. Bishop

- “Machine Learning: A Probabilistic Perspective”, Kevin P. Murphy