Abstract

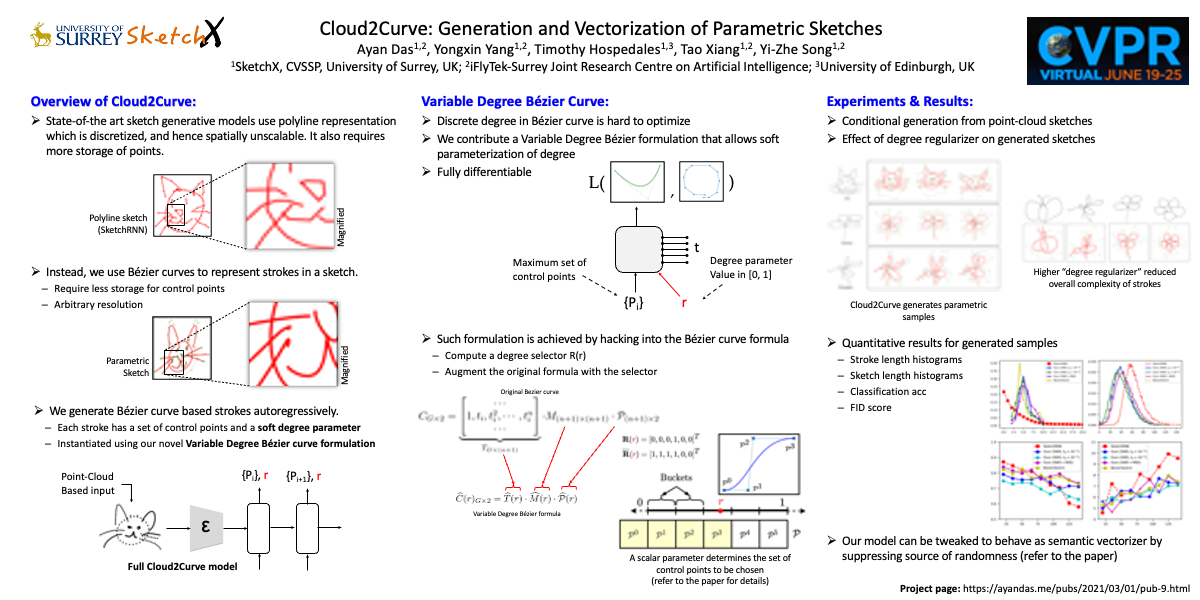

Analysis of human sketches in deep learning has advanced immensely through the use of waypoint sequences rather than raster-graphic representations. We further aim to model sketches as a sequence of low-dimensional parametric curves. To this end, we propose an inverse graphics framework capable of approximating a raster- or waypoint-based stroke, encoded as a point cloud, with a variable-degree Bézier curve. Building on this module, we present Cloud2Curve, a generative model for scalable high-resolution vector sketches that can be trained end-to-end using point-cloud data alone. As a consequence, our model is also capable of deterministic vectorization, which maps novel raster- or waypoint-based sketches to their corresponding high-resolution scalable Bézier equivalents. We evaluate the generation and vectorization capabilities of our model on the Quick, Draw! and K-MNIST datasets.
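To make the idea of approximating a stroke with a variable-degree Bézier curve concrete, here is a minimal sketch in Python with NumPy. It is not the learned inverse graphics module described above. It is a classical least-squares fit that assumes the stroke points arrive in drawing order and uses a chord-length parameterization. The names `bernstein_matrix`, `bezier_points`, and `fit_bezier` are hypothetical and chosen only for illustration.

```python
import numpy as np
from math import comb


def bernstein_matrix(t, degree):
    """Return the (len(t), degree + 1) matrix of Bernstein basis values."""
    t = np.asarray(t, dtype=float)[:, None]
    k = np.arange(degree + 1)[None, :]
    coeffs = np.array([comb(degree, i) for i in range(degree + 1)], dtype=float)
    return coeffs * t**k * (1.0 - t) ** (degree - k)


def bezier_points(control_points, num=50):
    """Sample `num` points on the Bezier curve defined by the control points."""
    control_points = np.asarray(control_points, dtype=float)
    degree = len(control_points) - 1  # the degree follows from the control point count
    t = np.linspace(0.0, 1.0, num)
    return bernstein_matrix(t, degree) @ control_points


def fit_bezier(points, degree):
    """Least-squares Bezier fit to ORDERED points (an assumption of this sketch).

    Each point gets a curve parameter proportional to its cumulative
    chord length along the stroke; the control points then solve a
    linear least-squares problem.
    """
    points = np.asarray(points, dtype=float)
    segment_lengths = np.linalg.norm(np.diff(points, axis=0), axis=1)
    t = np.concatenate([[0.0], np.cumsum(segment_lengths)])
    t /= t[-1]
    basis = bernstein_matrix(t, degree)
    control_points, *_ = np.linalg.lstsq(basis, points, rcond=None)
    return control_points


# Usage: fit the same stroke with two different degrees.
stroke = bezier_points([[0.0, 0.0], [1.0, 2.0], [3.0, 0.0]], num=20)
quadratic = fit_bezier(stroke, degree=2)
cubic = fit_bezier(stroke, degree=3)
```

Because the degree is just an argument, the same routine covers simple and complex strokes. Unlike this sketch, the framework in the abstract treats the stroke as a point cloud, so it does not rely on point ordering.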

Citation

@inproceedings{das2021,
  author = {Das, Ayan and Yang, Yongxin and Hospedales, Timothy and Xiang, Tao and Song, Yi-Zhe},
  title = {Cloud2Curve: {Generation} and {Vectorization} of {Parametric} {Sketches}},
  booktitle = {Computer Vision and Pattern Recognition (CVPR), 2021},
  date = {2021-03-01},
  url = {https://openaccess.thecvf.com/content/CVPR2021/papers/Das_Cloud2Curve_Generation_and_Vectorization_of_Parametric_Sketches_CVPR_2021_paper.pdf},
  langid = {en}
}