Abstract

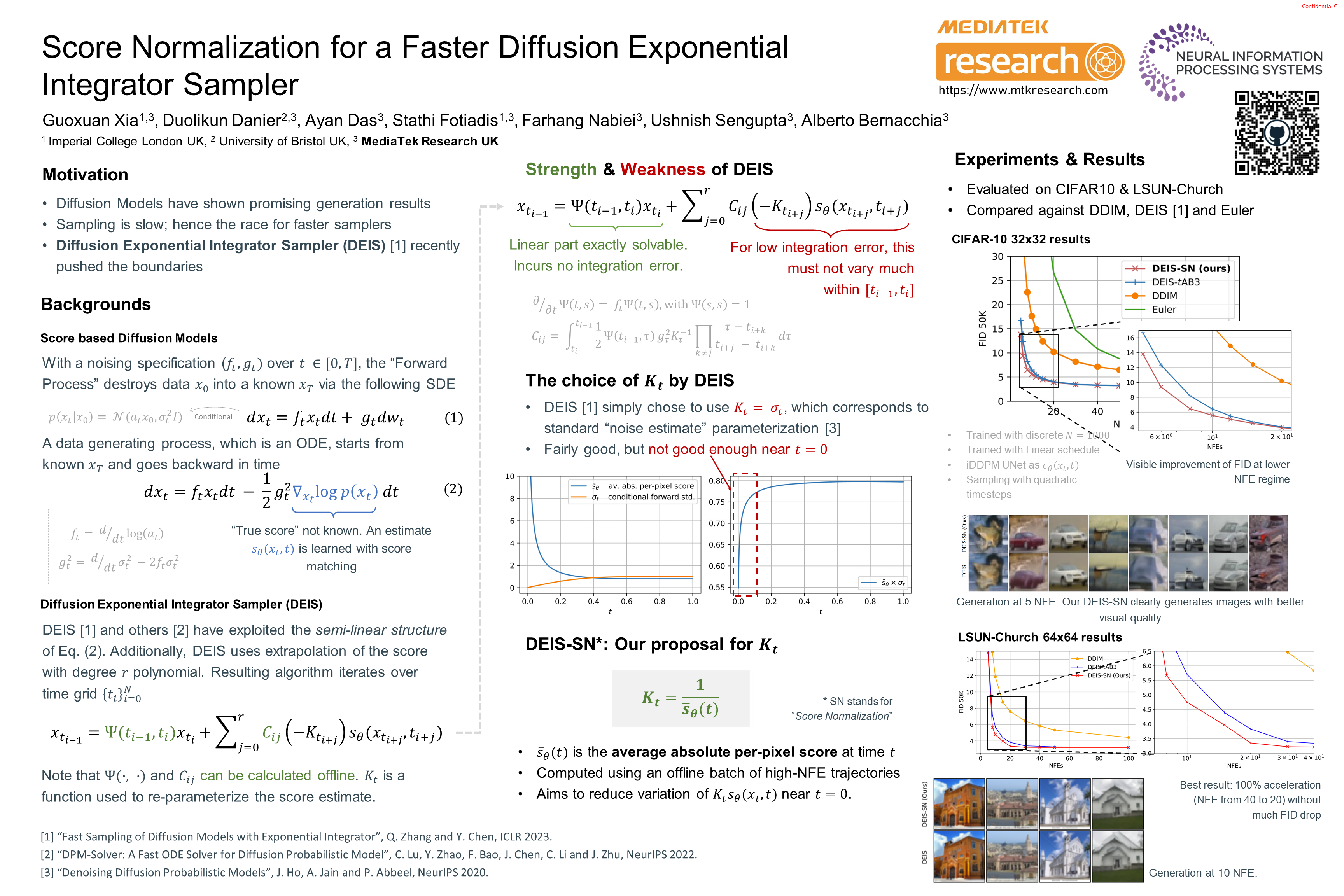

Recently, Zhang and Chen (2023) proposed the Diffusion Exponential Integrator Sampler (DEIS) for fast generation of samples from diffusion models. DEIS leverages the semi-linear nature of the probability flow ordinary differential equation (ODE) to greatly reduce integration error and improve generation quality at low numbers of function evaluations (NFEs). Key to this approach is the score function reparameterisation, which reduces the integration error incurred from using a fixed score function estimate over each integration step. The original authors use the default parameterisation of models trained for noise prediction: the score multiplied by the standard deviation of the conditional forward noising distribution. We find that although the mean absolute value of this score parameterisation is close to constant for a large portion of the reverse sampling process, it changes rapidly at the end of sampling. As a simple fix, we propose to instead reparameterise the score (at inference) by dividing it by the average absolute value of previous score estimates at that time step, collected from offline high-NFE generations. We find that our score normalisation (DEIS-SN) consistently improves FID compared to vanilla DEIS, with FID improving from 6.44 to 5.57 at 10 NFEs in our CIFAR-10 experiments.
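
To make the normalisation step concrete, the following is a minimal NumPy sketch of the idea described above, not the authors' implementation. The function names (`mean_abs_score_per_step`, `normalise_score`), the array layout, the toy noise schedule, and the use of linear interpolation between stored time steps are all assumptions made for illustration.

```python
import numpy as np


def mean_abs_score_per_step(offline_scores):
    """Average absolute score value at each stored time step.

    offline_scores: array of shape (num_samples, num_steps, dim) holding
    score estimates recorded during offline high-NFE generations
    (hypothetical layout).
    """
    return np.abs(offline_scores).mean(axis=(0, 2))


def normalise_score(score, t, t_grid, mean_abs):
    """Divide a score estimate at time t by the stored average magnitude.

    Linear interpolation between stored time steps is an assumption here;
    t_grid must be increasing for np.interp.
    """
    scale = np.interp(t, t_grid, mean_abs)
    return score / scale


# Toy data: scores whose magnitude grows quickly as t approaches 0,
# mimicking the rapid change at the end of sampling.
rng = np.random.default_rng(0)
t_grid = np.linspace(1e-3, 1.0, 50)
sigma = np.sqrt(t_grid)  # toy noise schedule, purely illustrative
offline_scores = rng.standard_normal((64, 50, 8)) / sigma[None, :, None]

mean_abs = mean_abs_score_per_step(offline_scores)
normalised = normalise_score(offline_scores[:, 10, :], t_grid[10], t_grid, mean_abs)
```

By construction, the normalised scores have a mean absolute value close to one at every stored time step, which is the property the default parameterisation loses near the end of sampling.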

Citation

@inproceedings{xia2023,
  author = {Xia, Guoxuan and Danier, Duolikun and Das, Ayan and Fotiadis, Stathi and Nabiei, Farhang and Sengupta, Ushnish and Bernacchia, Alberto},
  title = {Score {Normalization} for a {Faster} {Diffusion} {Exponential} {Integrator} {Sampler}},
  booktitle = {NeurIPS 2023 Workshop on Diffusion Models},
  date = {2023-11-01},
  url = {https://openreview.net/forum?id=AQvPfN33g9},
  langid = {en}
}