Abstract

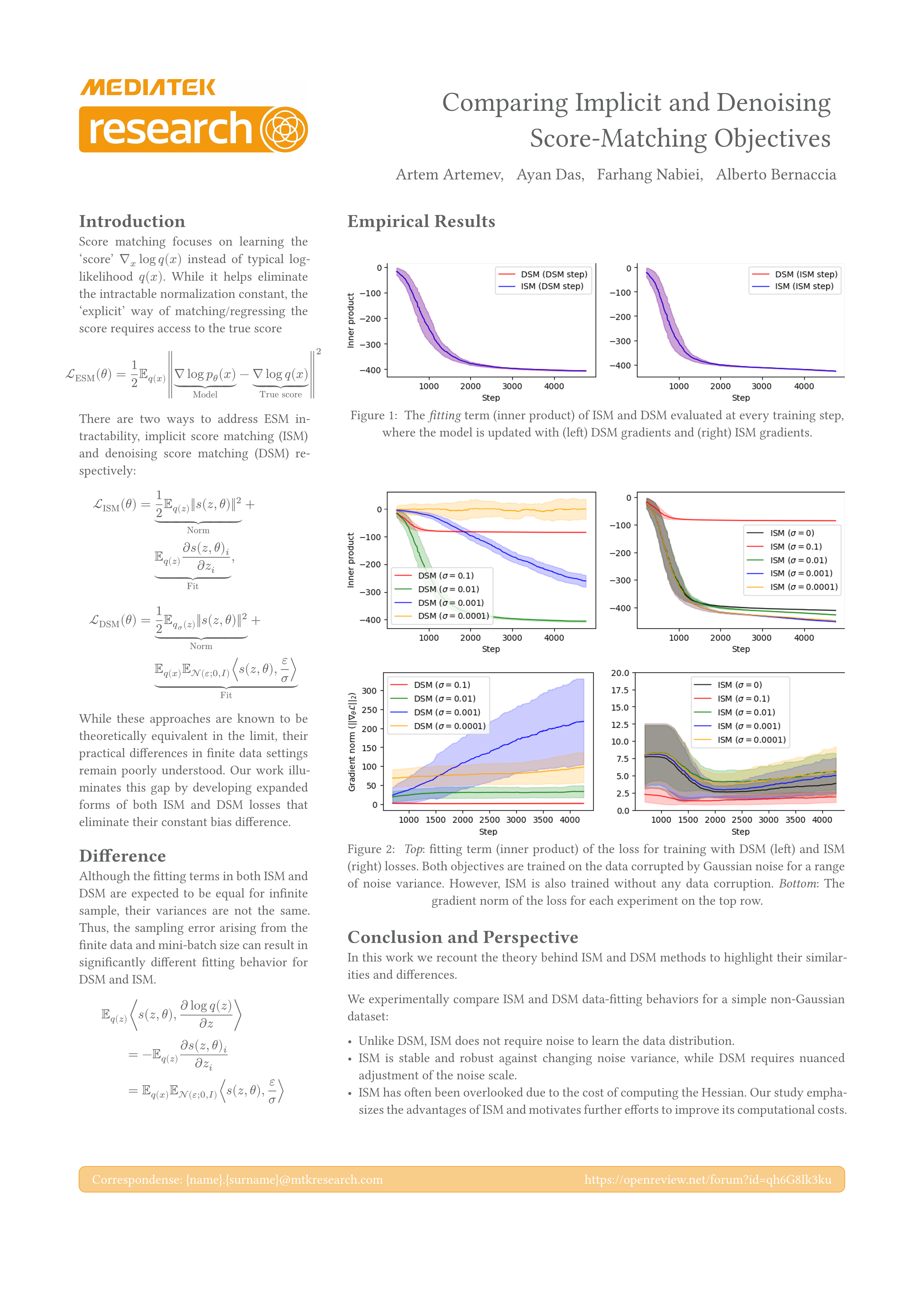

Score estimation has led to several state-of-the-art generative models, particularly in computer vision. Compared to maximum likelihood, one of the key advantages of score estimation is that it does not require the calculation of a normalization factor. However, explicit score matching necessitates knowledge of the true score of the data distribution, which is typically unavailable. To address this challenge, various approaches have been proposed to approximate the score-matching loss. The two main approaches are implicit score matching (ISM) and denoising score matching (DSM), which differ in their bias, making direct comparison difficult. In this work, we expand the ISM and DSM losses to remove the constant bias between them. While it is known that they are asymptotically equivalent, we show empirically that, in finite data regimes, differences in variance make the DSM loss sensitive to the noise scale. ISM does not require noised data to learn and is more robust in the noisy data setting than DSM, particularly when the noise scale is relatively small.
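To make the two objectives concrete, the following is a minimal sketch on a toy problem and not the experimental setup of the paper. It assumes 1-D standard normal data, a hypothetical one-parameter score model `s_a(x) = -a * x`, and Gaussian corruption `x_noisy = x + sigma * eps` for DSM. The function names `fit_ism` and `fit_dsm` are illustrative. For this linear model both losses have closed-form minimizers, so no optimizer is needed.

```python
import numpy as np


def fit_ism(x):
    """Minimizer of the ISM loss for the toy model s_a(x) = -a * x.

    ISM loss: mean(0.5 * s_a(x)**2 + d s_a(x) / dx)
            = 0.5 * a**2 * mean(x**2) - a.
    Setting the derivative with respect to a to zero gives a = 1 / mean(x**2).
    """
    return 1.0 / np.mean(x ** 2)


def fit_dsm(x, sigma, rng):
    """Minimizer of the DSM loss for the toy model s_a(x) = -a * x.

    With x_noisy = x + sigma * eps, the regression target is the score of the
    Gaussian corruption kernel, -(x_noisy - x) / sigma**2 = -eps / sigma.
    DSM loss: mean(0.5 * (s_a(x_noisy) + eps / sigma)**2).
    Setting the derivative with respect to a to zero gives
    a = mean(x_noisy * eps) / (sigma * mean(x_noisy**2)).
    """
    eps = rng.standard_normal(x.shape)
    x_noisy = x + sigma * eps
    return np.mean(x_noisy * eps) / (sigma * np.mean(x_noisy ** 2))


rng = np.random.default_rng(0)
x = rng.standard_normal(200_000)  # toy data: samples from N(0, 1)
sigma = 0.5                       # assumed DSM noise scale

a_ism = fit_ism(x)
a_dsm = fit_dsm(x, sigma, rng)

print(f"ISM estimate of a: {a_ism:.3f} (clean-data score slope is 1)")
print(f"DSM estimate of a: {a_dsm:.3f} (noised-data score slope is {1 / (1 + sigma**2):.3f})")
```

In this toy setting ISM targets the score of the clean data, while DSM targets the score of the noised distribution N(0, 1 + sigma^2), so the two estimates agree only as `sigma` goes to zero. The DSM estimate also divides a sample average by `sigma`, so for a fixed sample size its spread grows as `sigma` shrinks, which is one simple way to see the noise-scale sensitivity the abstract describes.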

Citation

@inproceedings{artemev2024,
  author = {Artemev, Artem and Das, Ayan and Nabiei, Farhang and Bernacchia, Alberto},
  title = {Comparing {Implicit} and {Denoising} {Score-Matching} {Objectives}},
  booktitle = {NeurIPS 2023 Workshop on Mathematics of Modern Machine Learning},
  date = {2024-12-01},
  url = {https://openreview.net/forum?id=qh6G8Ik3ku},
  langid = {en}
}