We talked extensively about Directed PGMs in my earlier article and also described one particular model following the principles of Variational Inference (VI). There exists another class of models conveniently represented by Undirected Graphical Models which are practiced relative less than modern methods of Deep Learning (DL) in the research community. They are also characterized as Energy Based Models (EBM), as we shall see, they rely on something called Energy Functions. In the early days of this Deep Learning renaissance, we discovered few extremely powerful models which helped DL to gain momentum. The class of models we are going to discuss has far more theoretical support than modern day Deep Learning, which as we know, largely relied on intuition and trial-and-error. In this article, I will introduce you to the general concept of Energy Based Models (EBMs), their difficulties and how we can get over them. Also, we will look at a specific family of EBM known as Boltmann Machines (BM) which are very well known in the literature.

Undirected Graphical Models

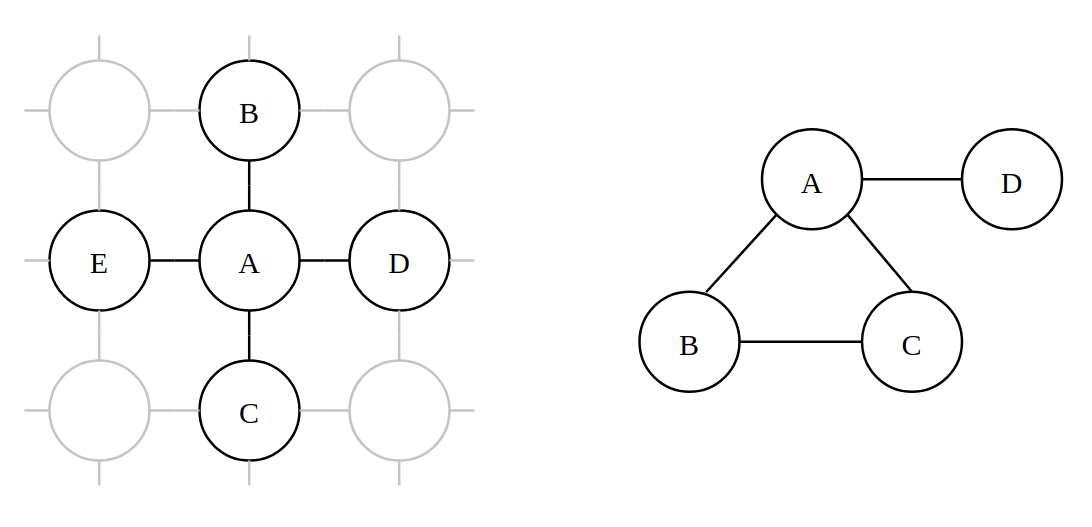

The story starts when we try to model a number of Random Variables (RVs) in the graph but we only have a weak knowledge about which variables are related but not the direction of influence. Direction is a necessary requirement for Directed PGMs. For example, let’s consider a lattice of atoms (Fig.1(a)) where only neighbouring atoms influence the spins but it is unclear what the direction of the influences are. For simplicity, we will use a simpler model (Fig.2(b)) for demonstration purpose.

We model a set of random variables

Just like every other model in machine learning, the potential functions can be parameterized, leading to

Semantically, potentials denotes how likely a given state is. So, higher the potential, more likely that state is.

Reparameterizing in terms of Energy

When we are defining a model, however, it is inconvenient to choose a constrained (non-negative valued) parameterized function. We can easily reparameterize each potential function in terms of energy functions

The

Such reparameterization affects the semantics of Eq.1 as well. Putting Eq.2 into Eq.1 yields

Here we defined

All this is fine .. well .. unless we need to do things like sampling, computing log-likelihood etc. Then our energy-based parameterization fails because its not easy to incorporate an un-normalized function into probabilistic frameworks. So we need a way to get back to probabilities.

Back to Probabilities

The obvious way to convert un-normalized potentials of the model to normalized distribution is to explicitely normalize Eq.3 over its domain

This is the probabilistic model implicitely defined by the enery functions over the whole state-space. [We will discuss Boltzmann Distribution. Here’s what the Wikipedia says:

In statistical mechanics and mathematics, a Boltzmann distribution (also called Gibbs distribution) is a probability distribution or probability measure that gives the probability that a system will be in a certain state as a function of that state’s energy …

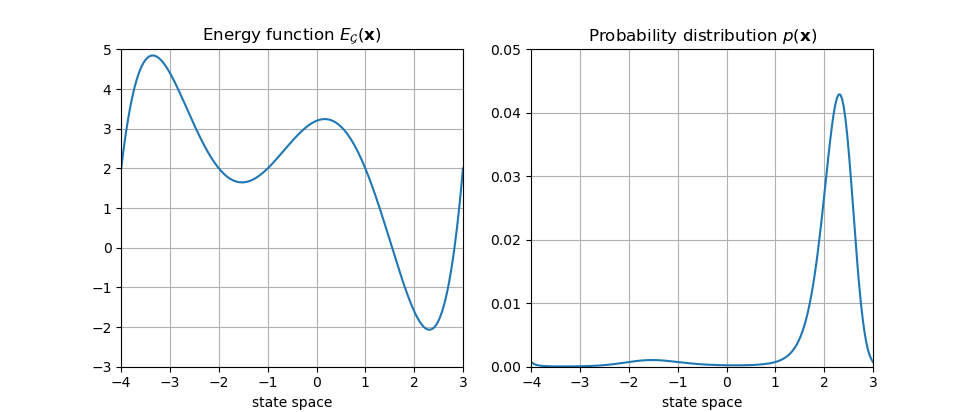

From now on, Eq.4 will be the sole connection between energy-space and probability-space. We can now forget about potential functions. A 1-D example of an energy function and the corresponding probability distribution is shown below:

The denominator of Eq.4 is often known as the “Partition Function” (denoted as

A hyper-parameter called “temperature” (denoted as

A general learning algorithm

The question now is - how do I learn the model given a dataset ? Let’s say my dataset has

Computing gradient w.r.t. parameters yields

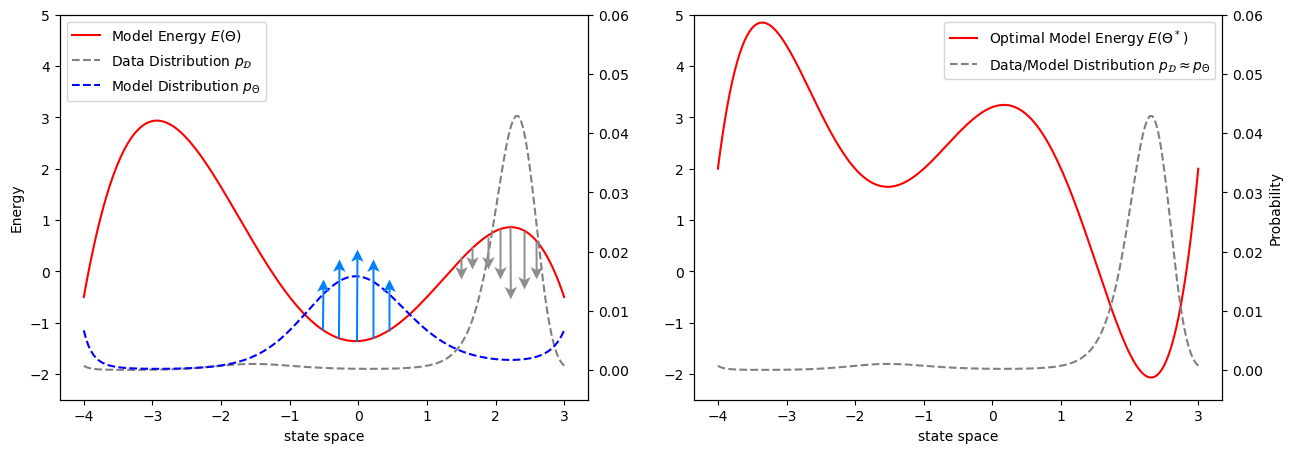

Take a few minutes to digest Eq.5. That’s a very important result. It would be worth discussing it a bit further. The first term in Eq.5 is often known as the “Positive Phase” and the second term as “Negative Phase”. The only difference between them, as you can see, is in the distributions on which the expectations are taken. The first expectation is on the data distribution - essentially picking up data from our dataset. The second expectation is on the model distribution - sampling from the model with current parameters. To understand their semantic interpretation, we need to see them in isolation. For the sake of explanation, consider both terms separately yielding a parameter update rule

The first update rule basically tries to changes the parameters in a way it can minimize the enrgy function at points coming from data. And the second one tries to maximize (notice the difference in sign) the energy function at points coming from the model. The original update rule (combining both of them) have both of these effects working simulteneously. The minima of the loss landscape occurs when our model discovers the data distribution, i.e.

Whatever may be the interpretation, as I mentioned before that the denominator of

Gibbs Sampling

As we saw in the last section, the only difficulty we have in implementing Eq.5 is not being able to sample from an intractable density (Eq.4). It tuns out, however, that the conditional densities of a small subset of variables given the others is indeed tractable in most cases. That is because, for conditionals, the

I excluded the parameter symbols for notational brevity. That summation in denominator is not as scary as the one in Eq.4 - its only on one variable. We take advantage of this and wisely choose a sampling algorithms that uses conditional densities. Its called Gibbs Sampling. Well, I am not going to prove it. You either have to take my words OR read about it in the link provided. For the sake of this article, just believe that the following works.

To sample

- We have a sample from last iteration

- We then pick one variable

We can start this process by setting

Now that we have pretty much everything needed, let’s explore some popular model based on the general principles of EBMs.

Boltzmann Machine

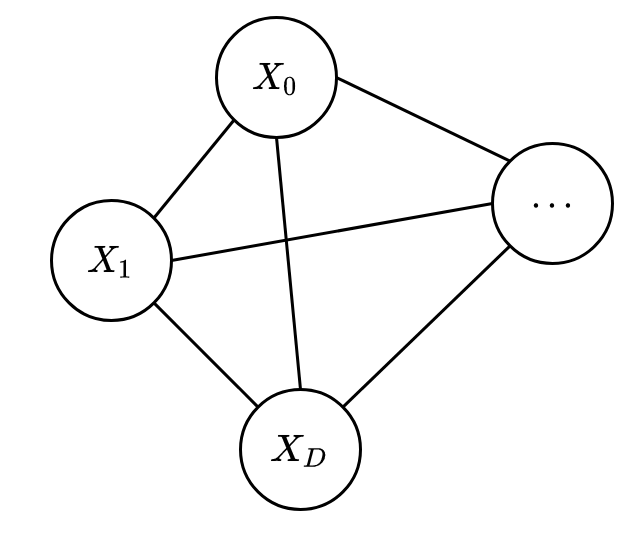

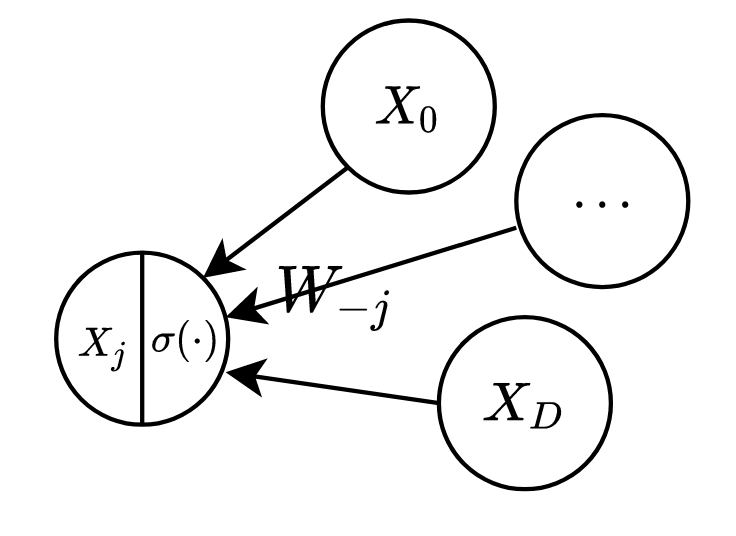

Boltzmann Machine (BM) is one particular model that has been in the literature for a long time. BM is the simplest one in its family and is used for modelling a binary random vector

By design, BM has a fully connected graph and hence only one maximal clique (containing all RVs). The energy function used in BM is possibly the simplest one you can imagine:

Upon expanding the vectorized form (reader is encouraged to try), we can see each term

If fire together, then wire together

How do we learn this model then ? We have already seen the general way of computing gradient. We have

Equipped with Gibbs sampling, it is pretty easy to implement now. But my description of the Gibbs sampling algorithm was very general. We have to specialize it for implementing BM. Remember that conditional density we talked about (Eq.6) ? For the specific energy function of BM (Eq.7), that has a very convenient and tractable form:

where

Boltzmann Machine with latent variables

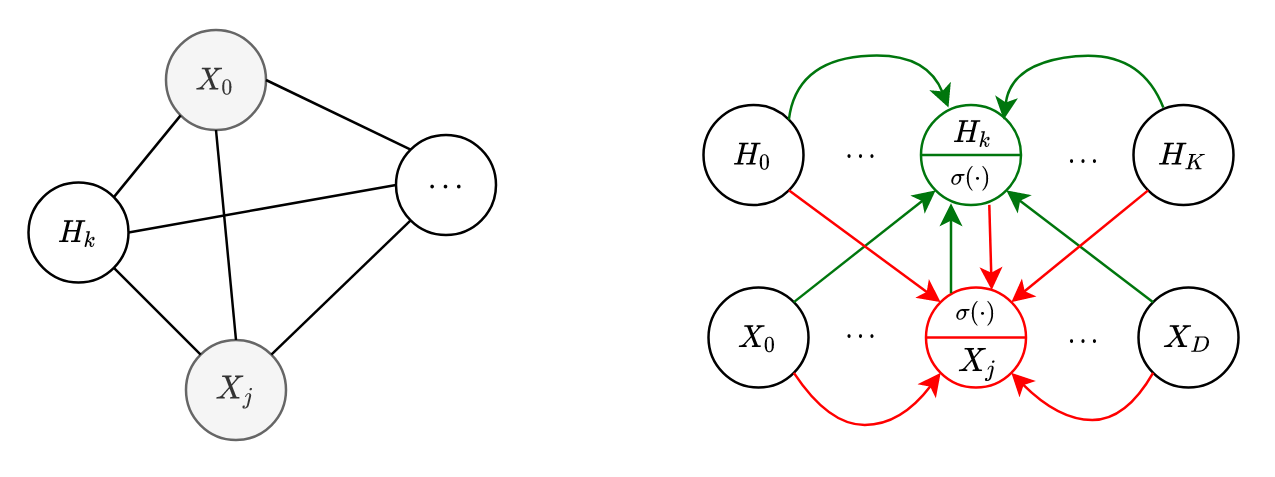

To add more expressiveness in the model, we can introduce latent/hidden variables. They are not observed, but help explaining the visible variables (see my Directed PGM article). However, all variables are still fully connected to each other (shown below in Fig.6(a)).

[ A little disclaimer that as we have already covered a lots of founding ideas, I am going to go over this a bit faster. You may have to look back and find analogies with our previous formulations ]

Suppose we have

The motivation for such energy function is very similar to original BM. However, our probabilistic form of the model is no longer Eq.4, but the marginalized joint distribution.

It might look a bit scary, but its just marginalized over the hidden state-space. Very surprisingly though, the conditionals have pretty similar forms as original BM:

Hopefully the notations are clear. If they are not, try comparing with the ones we used before. I recommend the reader to try proving it as an exercise. The diagram in Fig.6(b) hopefully adds a bit more clarity. It shows a similar computation graph for the conditionals we saw before in Fig.5.

Coming to the gradients, they also takes similar forms as original BM .. only difference is that now we have more parameters

If you are paying attention, you might notice something strange .. how do we compute the terms

Basically, we sample a visible data from our dataset and use the conditional to sample a hidden vector. We fix the visible vector and them sample from the hidden vector one component at a time (using Gibbs sampling).

For jointly sampling a visible and hidden vector from the model (for negative phase), we also use Gibbs sampling just as before. We sample all of visible and hidden RVs component by component starting the iteration from any random values. There is a clever hack though. What we can do is we can start the Gibbs iteration by fixing the visible vector to a real data from our dataset (and not anything random). Turns out, this is extremely useful and efficient for getting samples quickly from the model distribution. This algorithm is famously known as “Contrastive Divergence” and has long been used in practical implementations.

“Restricted” Boltzmann Machine (RBM)

Here comes the all important RBM, which is probably one of the most famous energy based models of all time. But, guess what, I am not going to describe it bit by bit. We have already covered enough that we can quickly build on top of them.

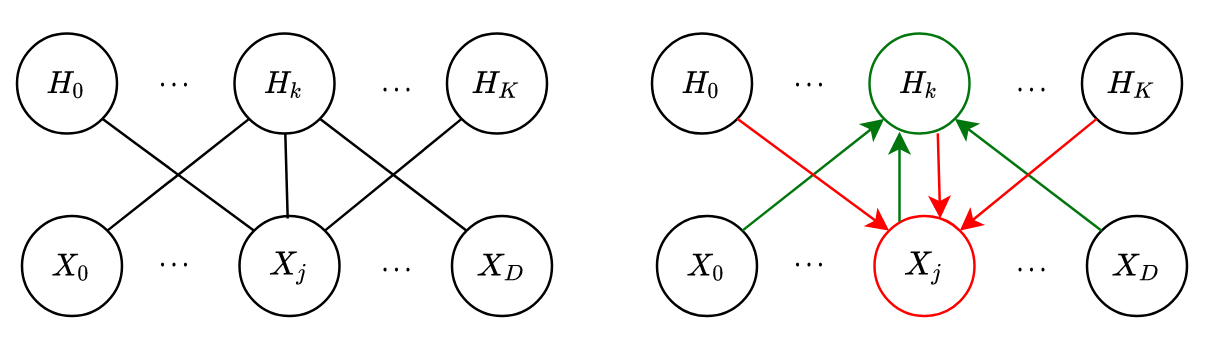

RBM is basically same as Boltzmann Machine with hidden units but with one big difference - it doesn’t have visible-visible AND hidden-hidden interactions, i.e.

If you do just that, Boooom ! You get RBMs (see its graphical diagram in Fig.7(a)). It makes the formulation much simpler. I am leaving it entirely for the reader to do majority of the math. Just get rid of

Let me point you out one nice consequence of this model: the conditionals for each visible node is independent of the other visible nodes and this is true for hidden nodes as well.

That means they can be computed in parallel

Moreover, the Gibbs sampling steps become super easy to compute. We just have to iterate the following steps:

- Sample a hidden vector

- Sample a visible vector

This makes RBM an attractive choice for practical implementation.

Whoahh ! That was a heck of an article. I encourage everyone to try working out the RBM math more rigorously by themselves and also implement it in a familiar framework. Alright, that’s all for this article.

References

Citation

@online{das2020,

author = {Das, Ayan},

title = {Energy {Based} {Models} {(EBMs):} {A} Comprehensive

Introduction},

date = {2020-08-13},

url = {https://ayandas.me/blogs/2020-08-13-energy-based-models-one.html},

langid = {en}

}