Generative modelling is one of the seminal tasks for understanding the distribution of natural data. The VAE, GAN and Flow families of models have dominated the field for the last few years due to their practical performance. Despite their commercial success, their theoretical and design shortcomings (intractable likelihood computation, restrictive architectures, unstable training dynamics, etc.) have led to the development of a new class of generative models named “Diffusion Probabilistic Models” or DPMs. Diffusion models, first proposed by Sohl-Dickstein et al. (2015), take inspiration from the thermodynamic diffusion process and learn a noise-to-data mapping in discrete steps, very similar to Flow models. Lately, DPMs have been shown to have some intriguing connections to Score Based Models (SBMs) and Stochastic Differential Equations (SDEs). These connections have further been leveraged to create their continuous-time analogues. In this article, I will describe the general framework of DPMs and their recent advancements, and explore the connections to other frameworks. For the sake of readers, I will avoid gory details and rigorous mathematical derivations, and use subtle simplifications in order to maintain focus on the core idea.

In case you haven’t checked the first part of this two-part blog, please read Score Based Models (SBMs).

What exactly do we mean by “Diffusion”?

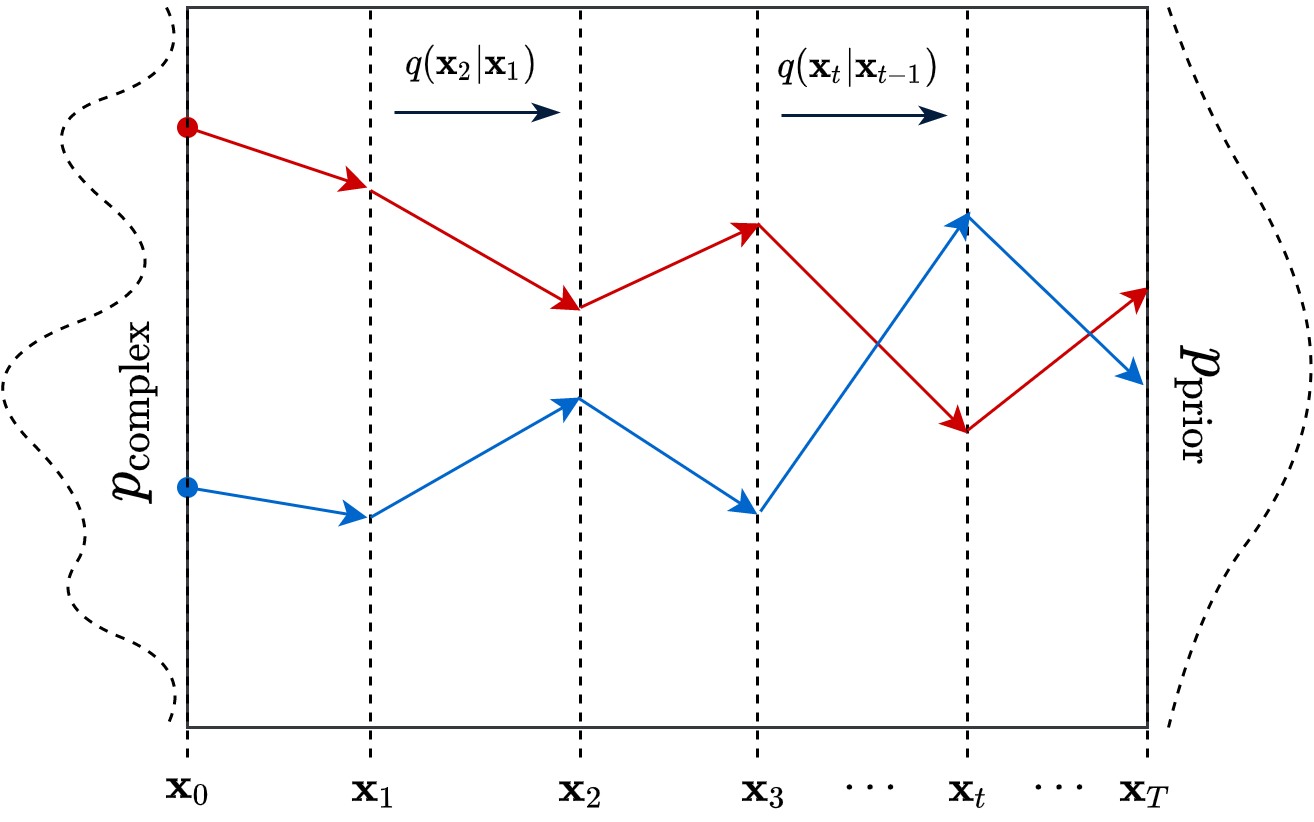

In thermodynamics, “Diffusion” refers to the flow of particles from high-density regions towards low-density regions. In the context of statistics, the meaning of diffusion is quite similar, i.e. the process of transforming a complex distribution

where the symbol

We can relate our original diffusion process in Equation 1 to a Markov chain by defining

From the properties of stationary distribution, we have

So far, we have confirmed that there is indeed an iterative way (refer to Equation 2) to convert samples from a complex distribution to a known (simple) prior. Even though we talked only in terms of generic densities, there is one very attractive choice of

For obvious reasons, it is known as Gaussian Diffusion. I purposefully changed the notation of the random variables to make it more explicit.
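To make the idea of Gaussian diffusion concrete, here is a minimal NumPy sketch. It assumes the usual kernel form from Ho, Jain, and Abbeel (2020), where each step scales the sample by `sqrt(1 - beta)` and adds Gaussian noise with variance `beta`. The schedule `betas`, the toy bimodal data and the function name `gaussian_diffusion_step` are illustrative choices of mine, not something fixed by the theory.

```python
import numpy as np

def gaussian_diffusion_step(x, beta, rng):
    """One step of the assumed kernel: x' = sqrt(1 - beta) * x + sqrt(beta) * eps."""
    eps = rng.standard_normal(x.shape)
    return np.sqrt(1.0 - beta) * x + np.sqrt(beta) * eps

rng = np.random.default_rng(0)

# A "complex" 1D data distribution: a bimodal mixture (toy stand-in for real data).
n = 20000
x = np.where(rng.random(n) < 0.5, -2.0, 3.0) + 0.1 * rng.standard_normal(n)

# Hypothetical noise schedule with 1000 small steps.
betas = np.linspace(1e-4, 0.02, 1000)
for beta in betas:
    x = gaussian_diffusion_step(x, beta, rng)

# After many steps the samples should look like a standard Gaussian.
final_mean, final_std = x.mean(), x.std()
print(f"mean = {final_mean:.3f}, std = {final_std:.3f}")
```

Whatever structure the starting samples had, repeated application of the kernel washes it out, which is exactly the "complex to simple" transformation discussed above.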

Generative modelling by undoing the diffusion

We proved the existence of a stochastic transform

This process is responsible for “destructuring” the data and turning it into an isotropic gaussian (almost structureless). Please refer to the figure below (red part) for a visual demonstration.

However, this isn’t very useful by itself. What would be useful is doing the opposite, i.e. starting from isotropic gaussian noise and turning it into

Fortunately, the theory of Markov chains guarantees that for gaussian diffusion, there indeed exists a reverse diffusion process

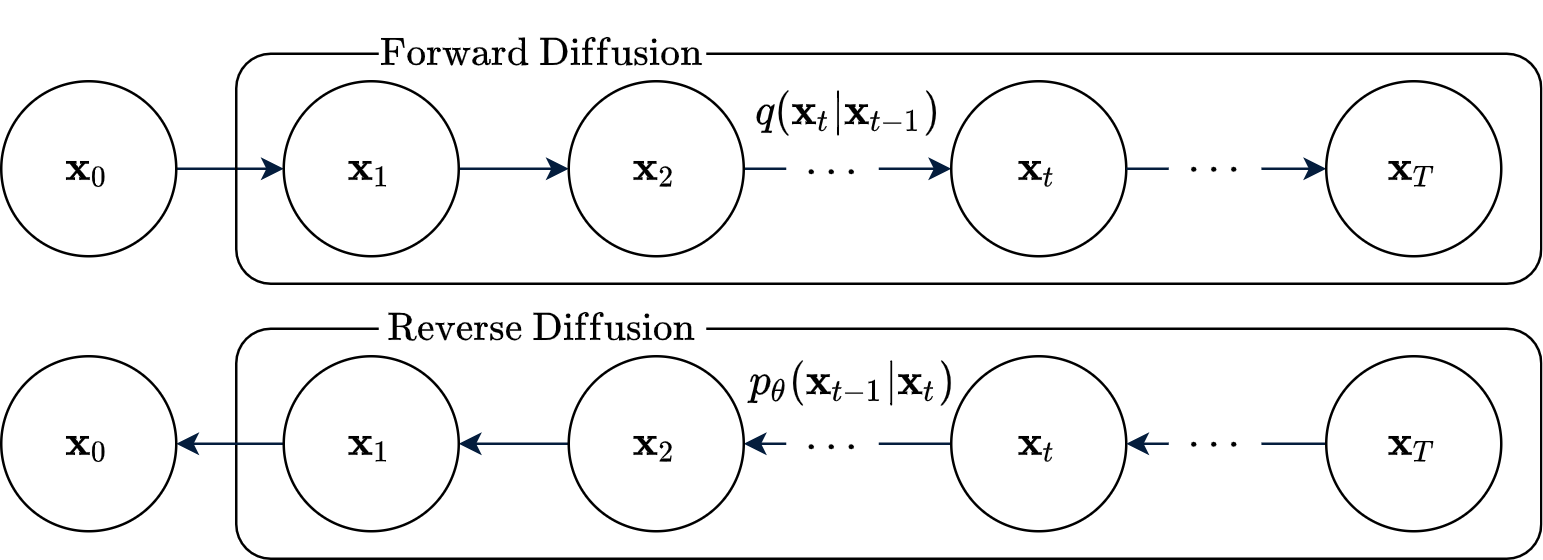

Graphical model and training

The stochastic “forward diffusion” and “reverse diffusion” processes described so far can be well expressed in terms of Probabilistic Graphical Models (PGMs). A series of

Each of the reverse conditionals

which isn’t quite computable in practice due to its dependence on

which is easy to compute and optimize. The expectation is over the joint distribution of the entire forward process. Getting a sample

Further simplification: Variance Reduction

Even though we can train the model with the lower-bound shown above, a few more simplifications are possible. The first one is due to Sohl-Dickstein et al. (2015) and is an attempt to reduce variance. They showed that the lower-bound can be further simplified and re-written as the following

There is a subtle approximation involved (the edge case of

The exact form (refer to Equation 7 of Ho, Jain, and Abbeel (2020)) is not important for a holistic understanding of the algorithm. The only thing to note is that

- Use the closed form of

- Expand the KL divergence formula

- Convert

.. and arrive at a simpler form
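The reason the KL terms collapse so nicely can be checked numerically. The sketch below assumes, as in Ho, Jain, and Abbeel (2020), that both gaussians share the same fixed isotropic variance, in which case the KL divergence reduces to a scaled squared distance between the means. The function names and the numbers are mine and purely illustrative.

```python
import numpy as np

def kl_diag_gaussians(mu1, var1, mu2, var2):
    """General closed form of KL( N(mu1, diag(var1)) || N(mu2, diag(var2)) )."""
    return 0.5 * np.sum(np.log(var2 / var1) + (var1 + (mu1 - mu2) ** 2) / var2 - 1.0)

def kl_equal_variance(mu1, mu2, sigma2):
    """Simplified form when both gaussians share the variance sigma2 * I."""
    return np.sum((mu1 - mu2) ** 2) / (2.0 * sigma2)

# Hypothetical means of the forward posterior and the learned reverse conditional.
mu_q = np.array([0.3, -1.2, 0.8])
mu_p = np.array([0.1, -1.0, 1.5])
sigma2 = 0.05
var = np.full(3, sigma2)

kl_full = kl_diag_gaussians(mu_q, var, mu_p, var)
kl_simple = kl_equal_variance(mu_q, mu_p, sigma2)
print(kl_full, kl_simple)
```

With equal variances the log-ratio and trace terms cancel, leaving only the squared difference of the means, so the per-step objective becomes a plain regression on the mean.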

Further simplification: Forward re-parameterization

For the second simplification, we look at the forward process in a bit more detail. There is an amazing property of the forward diffusion with gaussian noise - the distribution of the noisy sample

This is a consequence of the forward process being completely known and having well-defined probabilistic structure (gaussian noise). By means of (gaussian) reparameterization, we can also derive an easy way of sampling any

As a result,

This is the final form that can be implemented in practice as suggested by Ho, Jain, and Abbeel (2020).
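As a sanity check on the forward re-parameterization, the sketch below compares jumping directly to a time-step against running the chain step by step. It assumes the standard closed form from Ho, Jain, and Abbeel (2020), where the cumulative product `alpha_bars` of `1 - beta` controls both the signal scale and the noise scale. The schedule, the constant toy data and the name `q_sample` are my own illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(0)
betas = np.linspace(1e-4, 0.02, 1000)   # hypothetical noise schedule
alpha_bars = np.cumprod(1.0 - betas)    # cumulative product of (1 - beta)

def q_sample(x0, t, rng):
    """Sample x_t directly from x_0; t is a 0-based index into the schedule."""
    eps = rng.standard_normal(x0.shape)
    xt = np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * eps
    return xt, eps

x0 = np.full(50000, 2.0)   # many copies of one data point, to compare statistics
t = 300

# Direct jump to step t using the closed form.
xt_direct, _ = q_sample(x0, t, rng)

# The same thing done the slow way, one Markov step at a time.
xt_iter = x0.copy()
for beta in betas[: t + 1]:
    xt_iter = np.sqrt(1.0 - beta) * xt_iter + np.sqrt(beta) * rng.standard_normal(x0.shape)

print(xt_direct.mean(), xt_iter.mean())
print(xt_direct.std(), xt_iter.std())
```

Both routes should agree in mean and spread, which is why training never needs to simulate the whole chain to get a noisy sample at an arbitrary time-step.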

Connection to Score-based models (SBM)

Ho, Jain, and Abbeel (2020) uncovered a link between Equation 7 and a particular score-based model known as the Noise Conditioned Score Network (NCSN) (Song and Ermon 2019). With the help of the reparameterized form of

The above equation is a simple regression with

The expression in red can be discarded without any effect in performance (suggested by Ho, Jain, and Abbeel (2020)). I have further approximated the expectation over time-steps with sample average. If you look at the final form, you may notice a surprising resemblance with Noise Conditioned Score Network (NCSN) (Song and Ermon 2019). Please refer to

- The time-steps

- The expectation

- Just like NCSN, the regression target is the noise vector

- Just like NCSN, the learnable model depends on the noisy sample and the time-step (or scale).
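The points above can be put together into a rough sketch of the simplified training objective. I assume the noise-prediction form of Ho, Jain, and Abbeel (2020): pick random time-steps, noise the data with the closed-form forward process, and regress the model output onto the noise. Instead of a neural network, I use two stand-in "models" (`zero_model` and `oracle_model`, both hypothetical) on a dataset consisting of a single point `c`, for which the ideal noise predictor is known exactly.

```python
import numpy as np

rng = np.random.default_rng(0)
T = 1000
betas = np.linspace(1e-4, 0.02, T)      # hypothetical noise schedule
alpha_bars = np.cumprod(1.0 - betas)

def simple_loss(eps_model, x0, rng):
    """Monte Carlo estimate of the assumed noise-regression objective."""
    batch = x0.shape[0]
    t = rng.integers(0, T, size=batch)            # one random time-step per sample
    eps = rng.standard_normal(x0.shape)           # regression target
    a = alpha_bars[t][:, None]
    xt = np.sqrt(a) * x0 + np.sqrt(1.0 - a) * eps # noisy sample at step t
    residual = eps - eps_model(xt, t)
    return np.mean(np.sum(residual ** 2, axis=1))

# Toy dataset: every sample is the same point c.
c = np.array([1.0, -0.5])
x0 = np.tile(c, (4096, 1))

def zero_model(xt, t):
    """An untrained stand-in that always predicts zero noise."""
    return np.zeros_like(xt)

def oracle_model(xt, t):
    """For point-mass data the noise can be recovered exactly from (x_t, t)."""
    a = alpha_bars[t][:, None]
    return (xt - np.sqrt(a) * c) / np.sqrt(1.0 - a)

loss_zero = simple_loss(zero_model, x0, rng)
loss_oracle = simple_loss(oracle_model, x0, rng)
print(loss_zero, loss_oracle)
```

The zero predictor pays a loss close to the data dimension (the expected squared norm of the noise), while the oracle drives it to zero. A real model depends on both the noisy sample and the time-step in the same way, just with learnable parameters.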

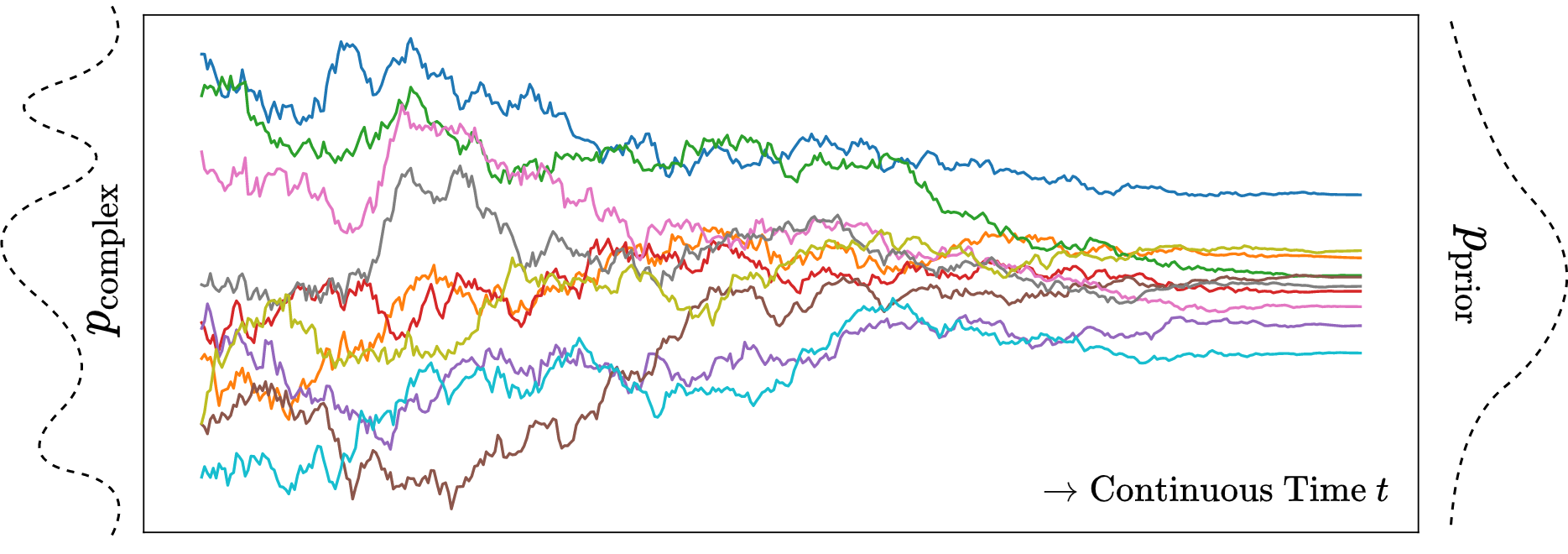

Infinitely many noise scales & continuous-time analogue

Inspired by the connection between Diffusion Models and Score-based models, Song, Kingma, et al. (2021) proposed to use infinitely many noise scales (equivalently time-steps). At first, it might look like a trivial increase in the number of steps/scales, but there happens to be a principled way to achieve it, namely Stochastic Differential Equations or SDEs. Song, Kingma, et al. (2021) reworked the whole formulation considering a continuous SDE as the forward diffusion. Interestingly, it turned out that the reverse process is also an SDE that depends on the score function.

Quite simply, finite time-steps/scales (i.e.

If you have ever studied SDEs, you might recognize that Equation 8 resembles the Euler–Maruyama numerical solver for SDEs. Considering

A visualization of the continuous forward diffusion in 1D is given in Figure 3 for a set of samples (different colors).
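For readers who want to reproduce such a simulation, here is a minimal Euler–Maruyama sketch in 1D. I assume the variance-preserving form of the forward SDE from Song, Kingma, et al. (2021) with a constant noise rate `beta`, i.e. a drift of `-0.5 * beta * x` and a diffusion coefficient of `sqrt(beta)`. The constant `beta`, the starting point and the step count are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
beta, T = 5.0, 1.0               # assumed constant noise rate and time horizon
n_steps, n_paths = 500, 10000
dt = T / n_steps

x = np.full(n_paths, 2.0)        # all paths start from the same data point
for _ in range(n_steps):
    drift = -0.5 * beta * x                      # f(x, t)
    noise = rng.standard_normal(n_paths)
    x = x + drift * dt + np.sqrt(beta * dt) * noise   # Euler-Maruyama update

# For this linear SDE the marginal at time T is known in closed form.
expected_mean = 2.0 * np.exp(-0.5 * beta * T)
expected_var = 1.0 - np.exp(-beta * T)
print(x.mean(), expected_mean)
print(x.var(), expected_var)
```

Each update has the same "shrink a little, add a little noise" shape as the discrete diffusion step, which is the resemblance mentioned above.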

Song, Kingma, et al. (2021) (section 3.4) proposed a few different choices

An old (but remarkable) result due to Anderson (1982) shows that the above forward diffusion can be reversed even in closed form, thanks to the following SDE

Hence, the reverse diffusion is simply solving the above SDE in reverse time with initial state
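To see the reverse SDE in action without training anything, the sketch below uses 1D gaussian toy data, for which the score of every intermediate marginal is available in closed form. I assume the same variance-preserving forward SDE with constant `beta` as a stand-in for the general case, and discretize the reverse-time SDE of Anderson (1982) with Euler–Maruyama. In a real model, `analytic_score` would be replaced by a learned score network. All names and constants here are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
beta, T = 5.0, 1.0               # assumed constant noise rate and time horizon
m, s = 2.0, 0.5                  # toy data distribution N(m, s^2)
n_steps, n_paths = 500, 10000
dt = T / n_steps

def analytic_score(x, t):
    """Score of the marginal p_t, which is gaussian for gaussian data."""
    mean = m * np.exp(-0.5 * beta * t)
    var = s ** 2 * np.exp(-beta * t) + 1.0 - np.exp(-beta * t)
    return -(x - mean) / var

# Start from the prior and integrate backwards in time, from t = T down to t = dt.
x = rng.standard_normal(n_paths)
for t in np.linspace(T, dt, n_steps):
    # Reverse drift: f(x, t) - g(t)^2 * score(x, t)
    drift = -0.5 * beta * x - beta * analytic_score(x, t)
    x = x - drift * dt + np.sqrt(beta * dt) * rng.standard_normal(n_paths)

gen_mean, gen_std = x.mean(), x.std()
print(gen_mean, gen_std)         # should be close to m and s
```

Starting from pure noise, the samples end up distributed like the data, and the only ingredient beyond the known forward SDE is the score function.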

1. Implicit Score Matching (ISM)

The easier way is to use the Hutchinson trace-estimator based score matching proposed by Song et al. (2020) called “Sliced Score Matching”.
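The trace estimator itself is easy to sketch in isolation. Hutchinson's trick estimates the trace of a matrix as the average of `v^T A v` over random probe vectors `v` with identity covariance. Here the matrix is the Jacobian of a toy linear score function, and I approximate the Jacobian-vector product with a central finite difference. That finite difference is a stand-in of mine; an actual implementation would typically use automatic differentiation. The precision matrix `P` and all names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
dim = 5
M = rng.standard_normal((dim, dim))
P = M @ M.T / dim + np.eye(dim)          # symmetric precision matrix of a toy gaussian

def score(x):
    """Score of N(0, P^{-1}); works on one point or a batch of row vectors."""
    return -x @ P

def hutchinson_trace_of_jacobian(score_fn, x, n_probes, rng, h=1e-3):
    """Estimate tr(d score / d x) at x using Rademacher probe vectors."""
    v = rng.choice([-1.0, 1.0], size=(n_probes, x.size))
    # Finite-difference Jacobian-vector products, one per probe (rows of jvp).
    jvp = (score_fn(x + h * v) - score_fn(x - h * v)) / (2.0 * h)
    return np.mean(np.sum(v * jvp, axis=1))

x = rng.standard_normal(dim)
estimate = hutchinson_trace_of_jacobian(score, x, 20000, rng)
exact = -np.trace(P)                     # Jacobian of the linear score is -P
print(estimate, exact)
```

The appeal is that the full Jacobian is never formed; only products with random vectors are needed, which keeps the cost manageable in high dimensions.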

Very similar to NCSN, we define a parametric score network

2. Denoising Score Matching (DSM)

There is the other “Denoising score matching (DSM)” way of training

Remember that in case of continuous diffusion, we never explicitly modelled the reverse conditionals

Since the conditionals are gaussian (again), it’s pretty easy to derive the expression for
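The score of a gaussian conditional is simple enough to verify numerically. The sketch below assumes an isotropic conditional with mean `mu` and standard deviation `sigma`, so the score is `-(x - mu) / sigma^2`, which equals `-eps / sigma` when the sample is written as `mu + sigma * eps`. The specific numbers for `scale` and `sigma` are made up for illustration.

```python
import numpy as np

def gaussian_log_density(x, mu, sigma):
    """Log-density of an isotropic gaussian N(mu, sigma^2 I)."""
    d = x.size
    return (-0.5 * np.sum((x - mu) ** 2) / sigma ** 2
            - d * np.log(sigma) - 0.5 * d * np.log(2.0 * np.pi))

def gaussian_score(x, mu, sigma):
    """Gradient of the log-density with respect to x."""
    return -(x - mu) / sigma ** 2

rng = np.random.default_rng(0)
x0 = np.array([0.5, -1.0, 2.0])
scale, sigma = 0.8, 0.6          # hypothetical mean scaling and noise level at some t
eps = rng.standard_normal(3)
mu = scale * x0
xt = mu + sigma * eps            # re-parameterized noisy sample

analytic = gaussian_score(xt, mu, sigma)

# Central finite differences of the log-density, one coordinate at a time.
h = 1e-5
numeric = np.array([
    (gaussian_log_density(xt + h * e, mu, sigma)
     - gaussian_log_density(xt - h * e, mu, sigma)) / (2.0 * h)
    for e in np.eye(3)
])
print(analytic, numeric, -eps / sigma)
```

This is why the denoising objective ends up as a regression onto a scaled version of the injected noise.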

Computing log-likelihoods

One of the core reasons score models exist is that they bypass the need for training with explicit log-likelihoods, which are difficult to compute for a large range of powerful models. It turns out that in the case of continuous diffusion models, there is an indirect way to evaluate the log-likelihood. Let’s focus on the “generative process” of continuous diffusion models, i.e. the reverse diffusion in Equation 9. What we would like to compute is

Note that the above ODE is essentially the same SDE but without the source of randomness. After learning the score (as usual), we simply drop-in replace the SDE with the above ODE. Now all thanks to Chen et al. (2018), this problem has already been solved. It is known as Continuous Normalizing Flow (CNF) whereby given

Please remember that this way of computing log-likelihood is merely a utility and cannot be used to train the model. A more recent paper (Song, Durkan, et al. 2021), however, shows a way to train SDE-based continuous diffusion models by directly optimizing (a bound on) the log-likelihood under some conditions, which may be the topic for another article. I encourage readers to explore it themselves.
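As a small illustration of the likelihood computation described in this section, the sketch below evaluates log-likelihoods for 1D gaussian toy data, where the exact answer is known. I assume a variance-preserving forward SDE with constant `beta`, the corresponding deterministic ODE (same drift, with half the score-dependent term and no noise), and the instantaneous change-of-variables formula of Chen et al. (2018). The score and the divergence are analytic here; with a learned score network the divergence would itself need to be estimated. All constants and names are my own assumptions.

```python
import numpy as np

beta, T = 20.0, 1.0              # assumed constant noise rate and time horizon
m, s = 2.0, 0.5                  # toy data distribution N(m, s^2)

def marginal_mean_var(t):
    mean = m * np.exp(-0.5 * beta * t)
    var = s ** 2 * np.exp(-beta * t) + 1.0 - np.exp(-beta * t)
    return mean, var

def score(x, t):
    mean, var = marginal_mean_var(t)
    return -(x - mean) / var

def ode_drift(x, t):
    """Deterministic drift: f(x, t) - 0.5 * g(t)^2 * score(x, t)."""
    return -0.5 * beta * x - 0.5 * beta * score(x, t)

def ode_divergence(t):
    """d(ode_drift)/dx in 1D; independent of x for this linear toy case."""
    _, var = marginal_mean_var(t)
    return -0.5 * beta + 0.5 * beta / var

def log_likelihood(x0, n_steps=4000):
    """Integrate the ODE from t = 0 to T with Euler steps, accumulating the divergence."""
    dt = T / n_steps
    x = np.array(x0, dtype=float)
    div_integral = 0.0
    for k in range(n_steps):
        t = k * dt
        div_integral += ode_divergence(t) * dt
        x = x + ode_drift(x, t) * dt
    log_prior = -0.5 * x ** 2 - 0.5 * np.log(2.0 * np.pi)   # standard normal prior
    return log_prior + div_integral

x_test = np.array([1.0, 2.0, 2.7])
estimated = log_likelihood(x_test)
exact = -0.5 * ((x_test - m) / s) ** 2 - np.log(s) - 0.5 * np.log(2.0 * np.pi)
print(estimated)
print(exact)
```

The estimate combines the prior log-density at the end of the trajectory with the accumulated divergence along it, and it should match the exact gaussian log-density up to discretization error.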

References

Citation

@online{das2021,
  author = {Das, Ayan},
  title = {An Introduction to {Diffusion} {Probabilistic} {Models}},
  date = {2021-12-04},
  url = {https://ayandas.me/blogs/2021-12-04-diffusion-prob-models.html},
  langid = {en}
}