Abstract

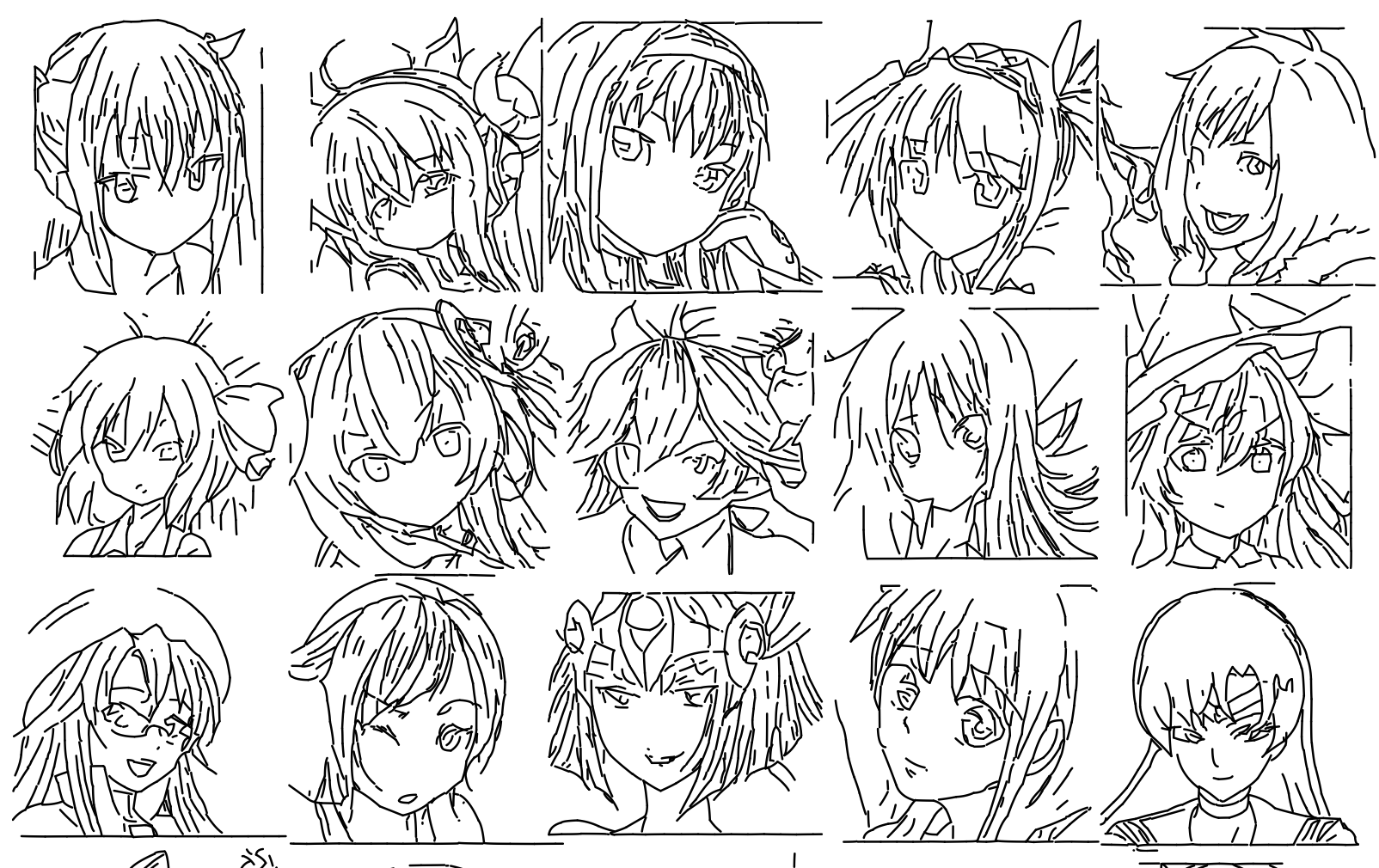

Vector drawings are innately interactive as they preserve creational cues. Despite this desirable property, they remain relatively underexplored due to the difficulty of modeling complex vector drawings. This is in part because existing approaches are primarily sequential and autoregressive, and fail to scale beyond simple drawings. In this paper, we define generative models over highly complex vector drawings by first representing them as “stroke-clouds”: sets of arbitrary cardinality composed of semantically meaningful strokes. The dimensionality of the strokes is a design choice that allows the model to adapt to a range of complexities. We learn to encode these sets of strokes into compact latent codes through a probabilistic reconstruction procedure backed by de Finetti’s theorem of exchangeability. A parametric generative model is then defined over the latent vectors of the encoded stroke-clouds. The resulting “Latent stroke-cloud generator (LSG)” thus captures the distribution of complex vector drawings on an implicit set space. We demonstrate the efficacy of our model on complex drawings (a newly created Anime line-art dataset) through a range of generative tasks.
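The two set-level ideas in the abstract can be illustrated with a toy NumPy sketch: a stroke-cloud of any size is mapped to a fixed-size latent that ignores stroke order, and, in the spirit of de Finetti’s theorem, strokes are treated as conditionally i.i.d. given that latent, so the set likelihood factorises as log p(S | z) = Σᵢ log p(sᵢ | z). This is not the authors’ LSG implementation. The mean-pooling (DeepSets-style) encoder, the Gaussian-mixture stroke density, the random weights and every name (`encode`, `set_log_likelihood`, `sample_cloud`, `D`, `K`, `M`) are hypothetical choices made for illustration, and the second-stage generative model over latents is omitted.

```python
import numpy as np

# Hypothetical sizes: each stroke is a D-dim vector (e.g. flattened curve
# control points), the latent has K dims, the hidden width is H, and the
# per-stroke density p(s | z) is a mixture of M isotropic Gaussians.
D, K, H, M = 8, 16, 32, 4
rng = np.random.default_rng(0)

# Random weights stand in for trained parameters (illustration only).
W_phi = rng.normal(scale=0.3, size=(D, H))     # per-stroke embedding
W_rho = rng.normal(scale=0.3, size=(H, K))     # pooled features -> latent
W_mu = rng.normal(scale=0.3, size=(K, M * D))  # latent -> component means
W_pi = rng.normal(scale=0.3, size=(K, M))      # latent -> mixture logits


def encode(strokes: np.ndarray) -> np.ndarray:
    """Map a stroke-cloud of shape (N, D), for any N >= 1, to a latent (K,).

    Strokes are embedded independently and mean-pooled, so the latent
    does not depend on the order in which the strokes are listed."""
    h = np.tanh(strokes @ W_phi)   # (N, H) per-stroke features
    return h.mean(axis=0) @ W_rho  # (K,) order-independent summary


def log_softmax(x: np.ndarray) -> np.ndarray:
    """Numerically stable log-softmax of a 1-D array."""
    m = x.max()
    return x - (m + np.log(np.exp(x - m).sum()))


def stroke_log_density(strokes: np.ndarray, z: np.ndarray, sigma: float = 0.5) -> np.ndarray:
    """log p(s_i | z) for every row s_i under a Gaussian mixture."""
    mu = (z @ W_mu).reshape(M, D)                               # (M, D)
    log_pi = log_softmax(z @ W_pi)                              # (M,)
    sq = ((strokes[:, None, :] - mu[None]) ** 2).sum(axis=-1)   # (N, M)
    log_norm = -0.5 * D * np.log(2 * np.pi * sigma**2)
    comp = log_pi[None] + log_norm - sq / (2 * sigma**2)        # (N, M)
    mx = comp.max(axis=1, keepdims=True)                        # log-sum-exp
    return (mx + np.log(np.exp(comp - mx).sum(axis=1, keepdims=True)))[:, 0]


def set_log_likelihood(strokes: np.ndarray, z: np.ndarray) -> float:
    """Exchangeable likelihood: log p(S | z) = sum_i log p(s_i | z)."""
    return float(stroke_log_density(strokes, z).sum())


def sample_cloud(z: np.ndarray, n: int, sigma: float = 0.5) -> np.ndarray:
    """Draw n strokes i.i.d. from p(s | z); n need not match the input size."""
    mu = (z @ W_mu).reshape(M, D)
    pi = np.exp(log_softmax(z @ W_pi))
    comps = rng.choice(M, size=n, p=pi)
    return mu[comps] + sigma * rng.normal(size=(n, D))


cloud = rng.normal(size=(5, D))                # toy 5-stroke cloud
shuffled = cloud[rng.permutation(len(cloud))]  # same set, different order
z = encode(cloud)
print(np.allclose(z, encode(shuffled)))        # True
print(np.isclose(set_log_likelihood(cloud, z),
                 set_log_likelihood(shuffled, z)))  # True
print(sample_cloud(z, n=12).shape)             # (12, 8)
```

Because both the encoder and the factorised likelihood are symmetric in the strokes, no canonical drawing order has to be imposed, which is the property that lets a set-based model sidestep sequential, autoregressive generation.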

Please check back later.

Citation

@inproceedings{ashcroft2024,
  author    = {Ashcroft, Alexander and Das, Ayan and Gryaditskaya, Yulia and Qu, Zhiyu and Song, Yi-Zhe},
  title     = {Modelling Complex Vector Drawings with Stroke-Clouds},
  booktitle = {International Conference on Learning Representations (ICLR) 2024},
  date      = {2024-03-14},
  url       = {https://openreview.net/pdf?id=O2jyuo89CK},
  langid    = {en}
}