Welcome to another tutorial on probabilistic models, following my primers on PGMs and VAEs. This time I am particularly excited, because the topic doesn’t get as much attention as traditional Deep Learning does. The idea of Probabilistic Programming has been around in the ML literature for a long time and has been enriched over the years. To avoid confusion up front: it is not really about writing traditional “programs”. Rather, it is about building Probabilistic Graphical Models (PGMs) equipped with an imperative programming style (i.e., iteration, branching, recursion, etc.). Just as Automatic Differentiation lets us compute derivatives of arbitrary computation graphs (in PyTorch, TensorFlow), black-box methods have been developed to “solve” probabilistic programs. In this post, I will give a generic view of why such a language is possible and how such black-box solvers are realized. At the end, I will also introduce one such Universal Probabilistic Programming Language, Pyro, which came out of Uber’s AI lab and has been gaining popularity.

Overview

Before I dive into details, let’s get the bigger picture clear. It is highly advisable to read any good reference about PGMs before you proceed - my previous article for example.

Generative view & Execution trace

Probabilistic Programming is NOT really what we usually think of as programming, i.e., completely deterministic execution of hard-coded instructions that does exactly what it’s told and nothing more. Rather, it is about building PGMs that model our belief about the data generation process. We, as users of such a language, express a model in imperative form, encoding our uncertainties in whatever way we want. Here is a toy example:

```
def model(theta):
    A = Bernoulli([-1, 1]; theta)
    P = 2 * A
    if A == -1:
        B = Uniform(P, 0)
    else:
        B = Uniform(0, P)
    C = Normal(B, 1)
    return A, P, B, C
```

If you assume this to be a valid program (for now), this is what we are talking about: all our traditional “variables” become “random variables” (RVs) with uncertainty attached to them in the form of probability distributions. Just to give you a taste of its flexibility, here are the constituent elements we encountered:

- Various different distributions are available (e.g., Normal, Bernoulli, Uniform etc.)

- We can do deterministic computation (e.g., `P = 2 * A`)

- Condition RVs on other RVs (e.g., `C = Normal(B, 1)`, whose mean is the RV `B`)

- Imperative style branching allows dynamic structure of the model …
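The toy program above is pseudocode, but it can be turned into something runnable with only the standard library. The following is a minimal sketch, assuming that `theta` is the probability of `A = 1` (the pseudocode does not say which outcome `theta` refers to) and using a hypothetical `rng` argument so the randomness can be seeded.

```python
import random


def model(theta, rng=random):
    """Draw one joint sample (A, P, B, C) by sampling each RV in program order."""
    # Assumption: theta is P(A = 1); otherwise A = -1.
    A = 1 if rng.random() < theta else -1
    P = 2 * A                      # deterministic function of A
    if A == -1:
        B = rng.uniform(P, 0)      # B ~ Uniform(-2, 0)
    else:
        B = rng.uniform(0, P)      # B ~ Uniform(0, 2)
    C = rng.gauss(B, 1)            # C | B ~ Normal(B, 1)
    return A, P, B, C


if __name__ == "__main__":
    for _ in range(5):
        print("(%.3f, %.3f, %.3f, %.3f)" % model(0.5))
```

Note how the branch on `A` changes which distribution `B` is drawn from. This is the "dynamic structure" mentioned in the last bullet.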

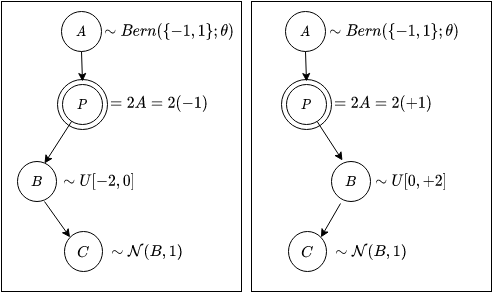

The figure above is a graphical representation of the model defined by the program.

Just like the invocation of a traditional compiler on a traditional program produces the desired output, this (probabilistic) program can be executed by means of “ancestral sampling”. I ran the program 5 times and each time I got samples from all my RVs. Each such “forward” run is often called an execution trace of the model.

```
>>> for _ in range(5):
...     print(model(0.5))
...
(1.000, 2.000, 0.318, -0.069)
(-1.000, -2.000, -1.156, -2.822)
(1.000, 2.000, 0.594, 0.865)
(1.000, 2.000, 1.100, 1.079)
(-1.000, -2.000, -0.262, -0.403)
```

This is the so-called “generative view” of a model. We typically treat the leaf nodes of a PGM as our observed data. The rest of the graph holds the “latent factors” of the model, which we either know or want to estimate. In general, a practical PGM can often be encapsulated as a set of latent nodes

Training and Inference

From now on, we’ll use the general notation rather than the specific example. The model may be parametric. For example, we had the Bernoulli success probability

We would like to do two things: 1. Estimate model parameters

As discussed in my PGM article, both of them are infeasible because:

1. Log-likelihood maximization is not possible because of the presence of latent variables

2. For continuous distributions on latent variables, the posterior is intractable

The way forward is to turn to Variational Inference and maximize our very familiar Evidence Lower BOund (ELBO), which lets us estimate the model parameters along with a set of variational parameters that help build a proxy for the original posterior

by estimating gradients w.r.t all its parameters
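To make the ELBO concrete, here is a small numerical sketch on a model where everything is known in closed form. The model is an assumption made for illustration only (it is not the one from this article): a latent `z ~ Normal(0, 1)` and an observation `x | z ~ Normal(z, 1)`, so the evidence is `Normal(x; 0, sqrt(2))` and the exact posterior is `Normal(x/2, sqrt(1/2))`. The function names are hypothetical. The ELBO is estimated by averaging `log p(x, z) - log q(z)` over samples from the proxy `q`.

```python
import math
import random


def log_normal(x, mean, std):
    """Log-density of Normal(mean, std) evaluated at x."""
    return -0.5 * math.log(2 * math.pi) - math.log(std) - 0.5 * ((x - mean) / std) ** 2


def elbo_estimate(x, q_mean, q_std, n_samples=20000, seed=0):
    """Monte Carlo estimate of E_q[log p(x, z) - log q(z)] with q = Normal(q_mean, q_std)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        z = rng.gauss(q_mean, q_std)
        log_joint = log_normal(z, 0.0, 1.0) + log_normal(x, z, 1.0)
        total += log_joint - log_normal(z, q_mean, q_std)
    return total / n_samples


x = 1.0
log_evidence = log_normal(x, 0.0, math.sqrt(2.0))            # exact log p(x)
elbo_prior_guide = elbo_estimate(x, 0.0, 1.0)                # q = prior: a loose bound
elbo_exact_guide = elbo_estimate(x, x / 2, math.sqrt(0.5))   # q = exact posterior: tight
print(log_evidence, elbo_prior_guide, elbo_exact_guide)
```

The gap between the evidence and the ELBO is the KL divergence from `q` to the true posterior, which is why the bound becomes tight when `q` matches the posterior.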

Black-Box Variational Inference

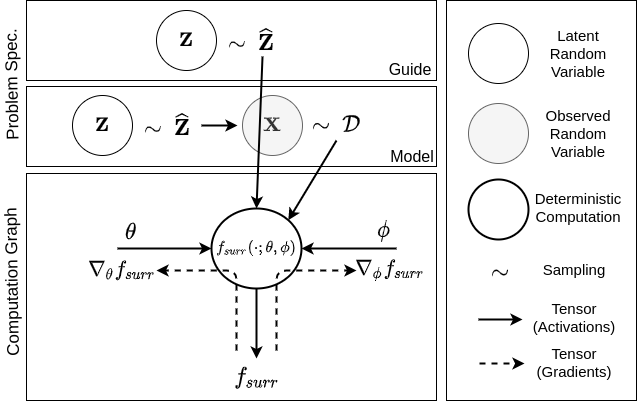

If you have gone through my PGM article, you might think you’ve seen these before. Actually, you’re right! There is really nothing new here. What we really need in order to establish a Probabilistic Programming framework is a unified way to implement the ELBO optimization for ANY given problem. And by “problem” I mean the following:

- A model specification

- An optional (parameterized) “Variational Model”

- And the observed data

But how do we compute (1)? The apparent problem is that gradient w.r.t.

Eq. (2) shows that the trick helped the

Succinctly, we run the Guide

The nice thing about Equation (2) (or equivalently Equation (3)) is we got the differentiation operator right on top of a deterministic function (i.e.,

Last but not least, let’s look at the function

I claimed before that the gradient estimates are unbiased. However, such a generic way of computing the gradient introduces high variance in the estimate and makes things unstable for complex models. A few tricks are widely used to get around this. Note, however, that such tricks always exploit model-specific structure. Three of them are presented below.
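Before moving on to the tricks, it may help to see the "unbiased but noisy" behaviour on a one-dimensional problem. The sketch below is an illustration under assumptions of my choosing, not the estimator of any particular library: the distribution is `Normal(mu, 1)`, the function inside the expectation is `z**2`, and the gradient w.r.t. `mu` is estimated with the generic score-function form, i.e. the function value times the gradient of the log-density. The exact answer is `2 * mu`.

```python
import random
import statistics


def score_function_grads(mu, n_samples=50000, seed=0):
    """Per-sample score-function estimates of d/dmu E_{z ~ Normal(mu, 1)}[z**2]."""
    rng = random.Random(seed)
    grads = []
    for _ in range(n_samples):
        z = rng.gauss(mu, 1.0)
        score = z - mu                  # d/dmu log Normal(z; mu, 1)
        grads.append((z ** 2) * score)  # f(z) * score, no derivative of f needed
    return grads


grads = score_function_grads(mu=1.0)
print("estimate:", statistics.fmean(grads))              # exact gradient is 2 * mu
print("per-sample variance:", statistics.variance(grads))
```

The average lands close to the true gradient, but the individual samples are spread widely around it. That spread is what the variance-reduction tricks try to shrink.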

I. Re-parameterization

We might get lucky that

Where
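As a rough illustration of re-parameterization, consider again a toy objective chosen for this sketch (it is an assumption, not the article's model): the gradient w.r.t. `mu` of the expectation of `z**2` under `Normal(mu, 1)`. Writing `z = mu + eps` with `eps ~ Normal(0, 1)` moves the parameter out of the sampling distribution, so the gradient can pass through the sample itself. For this objective at `mu = 1`, the per-sample variance of this estimator is 4, whereas working out the moments for the score-function form `z**2 * (z - mu)` gives 30.

```python
import random
import statistics


def reparam_grads(mu, n_samples=50000, seed=0):
    """Per-sample re-parameterized estimates of d/dmu E_{z ~ Normal(mu, 1)}[z**2]."""
    rng = random.Random(seed)
    grads = []
    for _ in range(n_samples):
        eps = rng.gauss(0.0, 1.0)   # noise that does not depend on mu
        z = mu + eps                # z = g(mu, eps), so dz/dmu = 1
        grads.append(2.0 * z)       # chain rule: d/dmu z**2 = 2 * z * dz/dmu
    return grads


rgrads = reparam_grads(mu=1.0)
print("estimate:", statistics.fmean(rgrads))              # exact gradient is 2 * mu
print("per-sample variance:", statistics.variance(rgrads))
```

The catch is that this needs the function inside the expectation to be differentiable in `z` and the distribution to admit such a transformation, which is why it counts as exploiting model-specific structure.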

II. Rao-Blackwellization

This is another well-known variance reduction technique. The full treatment is mathematically involved, so I will keep the explanation simple. It requires the full variational distribution to have some kind of factorization. A specific case is when we have the mean-field assumption, i.e.

With a little effort, we can pull out the gradient estimator for each of these

The quantity under the bar still has all the factors because it is immune to the gradient operator. Likewise, because the expectation sits outside the gradient operator, it contains all factors. At this point, Rao-Blackwellization offers a variance-reduced estimate of the above gradient, i.e.,

where
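The general principle behind Rao-Blackwellization can be seen in a much simpler setting than the ELBO gradient. The sketch below is a generic illustration with made-up quantities, not the specific estimator discussed above. To estimate the expectation of `(x + y)**2` for independent standard normals `x` and `y`, the randomness of `y` can be integrated out analytically, leaving the conditional expectation `x**2 + 1`. Both estimators have the same mean, but the conditioned one has lower variance.

```python
import random
import statistics


def naive_and_rao_blackwell(n_samples=50000, seed=0):
    """Return per-sample values of a naive estimator and its Rao-Blackwellized version."""
    rng = random.Random(seed)
    naive, rb = [], []
    for _ in range(n_samples):
        x = rng.gauss(0.0, 1.0)
        y = rng.gauss(0.0, 1.0)
        naive.append((x + y) ** 2)   # depends on both x and y
        # E[(x + y)**2 | x] = x**2 + 2*x*E[y] + E[y**2] = x**2 + 1
        rb.append(x ** 2 + 1.0)      # y has been integrated out analytically
    return naive, rb


naive, rb = naive_and_rao_blackwell()
print("means:", statistics.fmean(naive), statistics.fmean(rb))
print("variances:", statistics.variance(naive), statistics.variance(rb))
```

In the variational setting the same idea applies per factor: terms that do not depend on the factor being differentiated can be averaged out instead of sampled.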

III. Explicit enumeration for Discrete RVs

When we exploit the graph structure of the guide to simplify (1), we might end up with a term like this, due to factorization in the guide density

If it happens that the variable

So make sure the state-space is reasonable in size. This helps reduce the variance quite a bit.
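The benefit of enumeration is easiest to see with a single binary variable. The following sketch uses a hypothetical objective `f` and a `Bernoulli(p)` variable, both assumptions made for illustration. Summing over the two states gives the expectation, and hence its gradient w.r.t. `p`, exactly, while the sampled score-function estimate of the same gradient is only correct on average.

```python
import random
import statistics


def f(c):
    """Hypothetical per-state objective value."""
    return 3.0 if c == 1 else -1.0


def enumerated_expectation(p):
    """Exact E_{c ~ Bernoulli(p)}[f(c)] by summing over both states."""
    return p * f(1) + (1.0 - p) * f(0)


def enumerated_grad():
    """Exact d/dp of the expectation above: f(1) - f(0), with zero variance."""
    return f(1) - f(0)


def sampled_grads(p, n_samples=20000, seed=0):
    """Per-sample score-function estimates of the same gradient."""
    rng = random.Random(seed)
    grads = []
    for _ in range(n_samples):
        c = 1 if rng.random() < p else 0
        score = 1.0 / p if c == 1 else -1.0 / (1.0 - p)  # d/dp log Bernoulli(c; p)
        grads.append(f(c) * score)
    return grads


sgrads = sampled_grads(p=0.3)
print("exact:", enumerated_grad())
print("sampled mean:", statistics.fmean(sgrads), "variance:", statistics.variance(sgrads))
```

The cost of the exact sum grows with the number of states, which is the reason for the caveat about the size of the state-space.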

Whew! That’s a lot of maths. The good thing is that you hardly ever have to think about it in detail, because software engineers have put tremendous effort into making these algorithms as accessible as possible via libraries. We are going to take a brief look at one of them.

Pyro: Universal Probabilistic Programming

Pyro is a probabilistic programming framework that lets users write flexible models with a simple API. Pyro is written in Python and uses the popular PyTorch library both for its internal representation of the computation graph and as its automatic differentiation engine. Pyro is quite expressive because it allows the model/guide to have fully imperative control flow. Its core API consists of these functionalities:

- `pyro.param()` for defining learnable parameters.

- `pyro.distributions` (used as `dist` in the code below) contains a large collection of probability distributions.

- `pyro.sample()` for sampling from a given distribution.

Let’s take a concrete example and work it out.

Problem: Mixture of Gaussians

MoG (Mixture of Gaussians) is a relatively simple but widely studied probabilistic model. It has an important application in soft clustering. For the sake of simplicity, we assume only two mixture components. The generative view of the model is basically this: we flip a coin (latent) with bias

where

```python
import torch
import pyro
import pyro.distributions as dist

def model(data):  # Take the observations
    # Define coin bias as a parameter. That's what 'pyro.param' does
    rho = pyro.param("rho",  # Give it a name for Pyro to track properly
                     torch.tensor([0.5]),  # Initial value
                     constraint=dist.constraints.unit_interval)  # Has to be in [0, 1]
    # Define both means and stds with arbitrary initial values
    means = pyro.param("M", torch.tensor([1.5, 3.]))
    stds = pyro.param("S", torch.tensor([0.5, 0.5]),
                      constraint=dist.constraints.positive)  # std deviation must be positive

    with pyro.plate("data", len(data)):  # Mark conditional independence
        # Construct a Bernoulli and sample from it
        c = pyro.sample("c", dist.Bernoulli(rho))  # c \in {0, 1}
        c = c.long()  # cast to integers so 'c' can be used as an index
        X = dist.Normal(means[c], stds[c])  # pick a mean and std as per 'c'
        pyro.sample("x", X, obs=data)  # sample data (also mark it as observed)
```

Due to the discrete and low-dimensional nature of the latent variable

In Pyro, we define a guide that encodes this

```python
def guide(data):  # The guide doesn't use the data values; it only needs N = len(data)
    with pyro.plate("data", len(data)):  # conditional independence
        # Define variational parameters \lambda_i (one for every data point)
        lam = pyro.param("lam",
                         torch.rand(len(data)),  # randomly initialized
                         constraint=dist.constraints.unit_interval)  # \in [0, 1]
        c = pyro.sample("c",  # Careful, this name HAS TO BE the same as in the model
                        dist.Bernoulli(lam))
```

We generate some synthetic data from the following simulator to train our model on.

```python
import numpy as np
import torch

def getdata(N, mean1=2.0, mean2=-1.0, std1=0.5, std2=0.5):
    # Half of the points come from each Gaussian component
    D1 = np.random.randn(N // 2) * std1 + mean1
    D2 = np.random.randn(N // 2) * std2 + mean2
    D = np.concatenate([D1, D2], 0)
    np.random.shuffle(D)  # mix the two components together
    return torch.from_numpy(D.astype(np.float32))
```

Finally, Pyro requires a bit of boilerplate to set up the optimization:

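```python
from pyro.infer import SVI, Trace_ELBO
from pyro.optim import Adam

data = getdata(200)  # 200 data points
pyro.clear_param_store()  # forget parameters from any previous run
optim = Adam({})  # empty dict: use the default optimizer settings
svi = SVI(model, guide, optim, loss=Trace_ELBO())
for t in range(10000):
    svi.step(data)  # one gradient step; returns the current loss estimate
```

That’s pretty much all we need. I have plotted the (1) ELBO loss, (2) Variational parameter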

The full code is available in this gist: https://gist.github.com/dasayan05/aca3352cd00058511e8372912ff685d8.
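Because the latent coin `c` is binary, its exact posterior for a given data point follows directly from Bayes' rule, which gives a handy reference for what each variational parameter in the guide should ideally approximate. The sketch below is a sanity-check helper of my own (the name `responsibility` is hypothetical). It follows the model's convention that `c = 1` selects the second mean and standard deviation. The parameter values are only illustrative: they are the defaults of `getdata`, not learned values.

```python
import math


def normal_pdf(x, mean, std):
    """Density of Normal(mean, std) evaluated at x."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))


def responsibility(x, rho, means, stds):
    """Exact p(c = 1 | x) for a two-component mixture with p(c = 1) = rho."""
    w1 = rho * normal_pdf(x, means[1], stds[1])
    w0 = (1.0 - rho) * normal_pdf(x, means[0], stds[0])
    return w1 / (w0 + w1)


# Illustrative values only: the true generating parameters of the simulator.
rho, means, stds = 0.5, (2.0, -1.0), (0.5, 0.5)
for x in (-1.0, 0.5, 2.0):
    print(x, responsibility(x, rho, means, stds))
```

Keep in mind that the component labels are arbitrary, so a trained model may well assign the two clusters the other way around.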

That’s all for today. Hopefully I was able to convey the bigger picture of probabilistic programming, which is quite useful for modelling many kinds of problems. The following references were my sources of information while writing the article. Interested readers are encouraged to check them out.

Citation

```bibtex
@online{das2020,
  author = {Das, Ayan},
  title = {Introduction to {Probabilistic} {Programming}},
  date = {2020-05-05},
  url = {https://ayandas.me/blogs/2020-04-30-probabilistic-programming.html},
  langid = {en}
}
```