Abstract

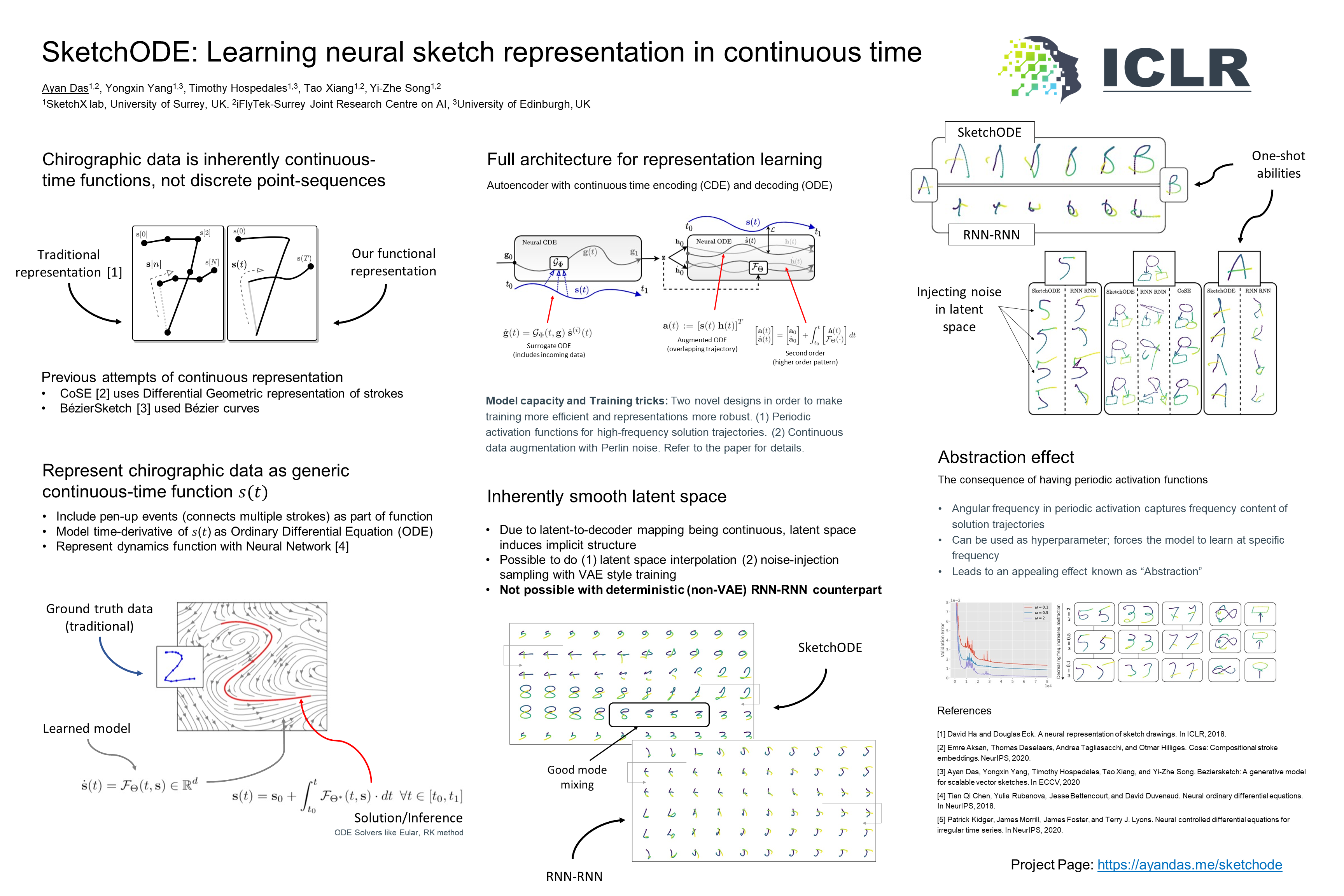

Learning meaningful representations for chirographic drawing data such as sketches, handwriting, and flowcharts is a gateway to understanding and emulating human creative expression. Although such data is inherently continuous in time, existing works have treated it as discrete-time sequences, disregarding its true nature. In this work, we model such data as continuous-time functions and learn compact representations using Neural Ordinary Differential Equations (Neural ODEs). To this end, we introduce the first continuous-time Seq2Seq model and demonstrate several remarkable properties that set it apart from traditional discrete-time analogues. We also provide solutions to practical challenges that arise with such models, including a family of parameterized ODE dynamics and a continuous-time data augmentation scheme particularly suited to the task. We validate our models on several datasets, including VectorMNIST, DiDi, and Quick, Draw!.
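The basic idea of treating a stroke as a continuous-time function, rather than a fixed list of sampled points, can be illustrated with a toy example: the 2-D pen position x(t) is defined as the solution of dx/dt = f(t, x), so it can be queried at any set of time points. This is a minimal sketch under stated assumptions: `dynamics` is a hypothetical hand-picked spiral standing in for a learned network, the solver is a plain fixed-step RK4, and nothing here is the authors' SketchODE architecture or code.

```python
import math
from typing import Callable, List, Tuple

Vec = Tuple[float, float]
Dynamics = Callable[[float, Vec], Vec]


def dynamics(t: float, x: Vec) -> Vec:
    # Hypothetical fixed dynamics standing in for a learned network f_theta:
    # a slowly decaying rotation, which traces an inward spiral "stroke".
    omega, decay = 2.0 * math.pi, 0.3
    return (-decay * x[0] - omega * x[1], omega * x[0] - decay * x[1])


def rk4_step(f: Dynamics, t: float, x: Vec, h: float) -> Vec:
    # One classical fourth-order Runge-Kutta step of size h.
    k1 = f(t, x)
    k2 = f(t + h / 2, (x[0] + h / 2 * k1[0], x[1] + h / 2 * k1[1]))
    k3 = f(t + h / 2, (x[0] + h / 2 * k2[0], x[1] + h / 2 * k2[1]))
    k4 = f(t + h, (x[0] + h * k3[0], x[1] + h * k3[1]))
    return (
        x[0] + h / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]),
        x[1] + h / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]),
    )


def solve(f: Dynamics, x0: Vec, times: List[float], substeps: int = 50) -> List[Vec]:
    # Integrate from times[0] and return the state at every requested time.
    out = [x0]
    x, t = x0, times[0]
    for t_next in times[1:]:
        h = (t_next - t) / substeps
        for _ in range(substeps):
            x = rk4_step(f, t, x, h)
            t += h
        t = t_next  # reset to the exact query time to avoid float drift
        out.append(x)
    return out


def linspace(a: float, b: float, n: int) -> List[float]:
    return [a + (b - a) * i / (n - 1) for i in range(n)]


# The same continuous trajectory, queried at two different resolutions.
coarse = solve(dynamics, (1.0, 0.0), linspace(0.0, 1.0, 5))
fine = solve(dynamics, (1.0, 0.0), linspace(0.0, 1.0, 201))
```

Because the trajectory is defined by the dynamics rather than by the samples, the 5-point and 201-point queries agree at the time points they share, and both end near (e^{-0.3}, 0), the closed-form value for this linear system. In a Neural ODE, `dynamics` would instead be a trained network whose parameters are fitted by differentiating through the solver.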

Citation

@inproceedings{das2022,
  author = {Das, Ayan and Yang, Yongxin and Hospedales, Timothy and Xiang, Tao and Song, Yi-Zhe},
  title = {SketchODE: {Learning} Neural Sketch Representation in Continuous Time},
  booktitle = {International Conference on Learning Representations (ICLR), 2022},
  date = {2022-01-21},
  url = {https://openreview.net/pdf?id=c-4HSDAWua5},
  langid = {en}
}